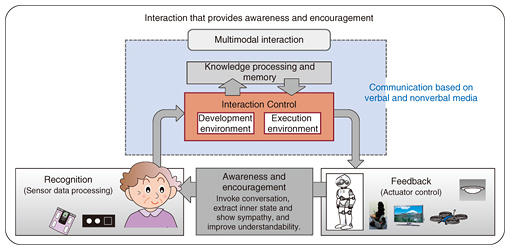

Feature Articles: Artificial Intelligence Research Activities in NTT Group

Vol. 14, No. 5, pp. 15–20, May 2016. https://doi.org/10.53829/ntr201605fa3

Cloud-based Interaction Control Technologies for Robotics Integrated Development Environment (R-env™)

Abstract

Communication robots are able to detect the surrounding situation through cameras or sensors, and they use motion to show non-verbal expressions. Furthermore, automobiles and personal devices such as vacuum cleaners are being robotized with the application of verbal communication technology. In the near future, it will become very important for various robots to be able to connect to and work together with the devices that surround us in order to support human activities and enhance our lives. This article introduces R-env™, a cloud-based Interaction Control technology.

Keywords: multi-modal interaction, cloud robotics, networked robot

1. History of robots and artificial intelligence

The word “robot” was reportedly first used by Czech playwright Karel Čapek in his 1920 science fiction play “Rossum’s Universal Robots” [1]. The robot’s role in the play was to replace some human labor. Depending on the intended task, Čapek’s robots would exert physical or intellectual power far beyond that of humans. In one scene of the play, a highly intellectual robot with conscious awareness communicates with humans. In the literary and theatrical world, robots demonstrate human-like physical and/or intellectual capabilities that in some cases exceed those of humans. The role of robots in society has been questioned since robots were first introduced. In the technology field, robots and artificial intelligence (AI) mutually influence each other’s development.
Some key events in the development of robots include the establishment of the Robotics Society of Japan in 1983 and the first International Conference on Robotics and Automation, organized by the Institute of Electrical and Electronics Engineers (IEEE), in 1984. In 1986, the Japanese Society for Artificial Intelligence was established, and in 1988, NTT launched the Human Interface Laboratory Intelligent Robot Research Project with a mission to introduce robot control technology into production sites in Japan. Thus, research commenced on computer vision, motion control, and methods of training robots to understand the goals of the work assigned to them, otherwise known as robot-teaching technology [2].

Until fairly recently, technological development centered on industrial robots. However, research on the interaction between humans and robots has advanced a great deal and is now quite active. In 1991, IEEE established RO-MAN (International Symposium on Robot and Human Interactive Communication) to focus on communication between humans and robots. In 1999, Sony Corporation launched AIBO, a dog-type pet robot, which made it possible for the general public to actually see and experience high-level communication robots that until then had been limited to research laboratories. AIBO’s algorithms and sensors enable it to understand the intention of users and to express its reactions by using light, sound, and motion [3]. In 2000, Honda Motor Co., Ltd. announced ASIMO, a humanoid robot that was able to walk like a human [4].

2. NTT’s development of robotic technology

NTT began researching and developing a network robot platform to permit the collaboration of multiple robots over the network. Part of this work was conducted under contract from the Ministry of Internal Affairs and Communications (MIC) of Japan as “Comprehensive research and development of network-human interface (network robot technology)” from 2004 to 2008.
One aim of the network robot platform research was to realize a novel networked society where various services could be used anywhere, at any time, by connecting sensors, appliances, and robots so that they could work in collaboration over a highly advanced network [5]. Three types of robots were developed:

(1) A tangible type of humanoid robot called a visible robot

(2) A virtual robot, which was a software agent on appliance software

(3) An unconscious robot consisting of sensors in the surrounding environment

We also developed a mechanism enabling these different types of robots to be connected and to work in collaboration by exchanging necessary information over the network. To enable various kinds of information to be exchanged between different types of robots manufactured by different companies, we devised a description method to handle core information related to the user (4W: who, when, where, and what of actions and behavior) and core information related to the robot (4W1H: who, when, where, what (the robot’s capabilities), and how (the robot’s behavior)). A network robot platform that incorporates this technology makes it possible to integrate robots that were previously difficult to connect to each other. We conducted an experiment on connecting robots and transferring robot relationship information using four existing description methods over a standard communication protocol.

3. Novel robot collaboration service to expand human potential

As previously described, different types of robots are being released by numerous companies, and they are starting to be used experimentally for various purposes. Furthermore, we are seeing increased sophistication and robotization of appliances and vehicles such as cleaning robots and self-driving cars, which were not developed as imitations of humans or animals. Therefore, it can be assumed that the variety of intelligent robots will only increase. As reported by robot start inc. [6], the number of communication robots in Japan will reach 2.65 million by the year 2020. Given that there are approximately 55 million households in Japan [7], this number suggests there will be roughly one communication robot for every 20 households. This number is likely to increase, judging from the number of cleaning robots, which are only now coming into wide use.

What benefits can we expect from intelligent robots with AI? Are the benefits limited to increasing labor efficiency, as evidenced by industrial robots or as in the play written by Karel Čapek? The expression “support of human growth” is the key to the new services that we are trying to achieve at NTT Service Evolution Laboratories. We are making efforts to develop a novel service that will enable robots and machines to understand the situation that surrounds us, encourage us, enhance our abilities, and help us perform new actions. For instance, with regard to children, the new service will watch over a child, support the child in communicating with others, enhance the child’s learning motivation, become the child’s good friend, and last but not least, grow up with the child. With regard to the elderly, the new service will enable robots and machines to provide encouragement and support in healthcare and external activities, improve social participation, and support an independent daily life. For adults in their prime, the new service will enable robots and machines to help with daily schedule and task management and to support sporting activities so that these adults can spend their time happily and efficiently.

We aim to develop robots that will learn and mature with their human partners over an entire lifetime. However, this does not mean we must continue to use the same robot hardware forever. The robot will store information about the user’s preferences and characteristics on the cloud. It will also have a brain so that it can learn and mature with its user.
The hardware of the robot may change from time to time or with a change in location. There may even be two or more devices. However, by connecting these robots and devices, we can overcome spatial and temporal distance and achieve a robot that can support a person’s activities over an entire lifetime.

4. Interaction Control technology

One of the key technologies necessary to realize the above goals is the Interaction Control technology used to connect humans and the devices that surround them. This technology will make it possible for the robot to understand a situation by combining the capabilities of various devices (e.g., voice from a microphone, facial expressions from a camera, and health data from electronic health devices) and also to deliver messages that include emotion by combining verbal and nonverbal communication methods. We have developed this interaction technology as the cloud-based Interaction Control technology called ‘R-env™’ (Fig. 1).

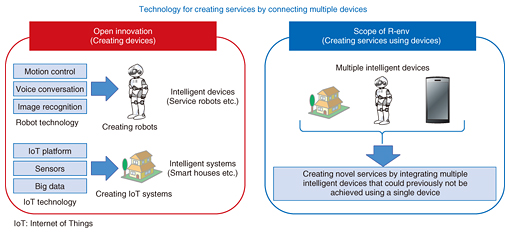
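
The idea of combining device capabilities to understand a situation, and then replying through both verbal and nonverbal channels, can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Observation` and `Response` types, the modality and label names, the thresholds, and the selection rules are hypothetical and are not part of R-env.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Observation:
    """One reading from one device (all fields are hypothetical)."""
    modality: str   # e.g. "voice", "face", "health"
    label: str      # e.g. "tired", "smile", "heart_rate"
    value: float    # a confidence in [0, 1] or a raw measurement


@dataclass(frozen=True)
class Response:
    """A message that pairs a verbal part with a nonverbal part."""
    speech: str
    gesture: str


def estimate_situation(observations: List[Observation]) -> Dict[str, float]:
    """Merge readings from several devices into one label -> value map.

    When two devices report the same label, the larger value is kept.
    """
    situation: Dict[str, float] = {}
    for obs in observations:
        situation[obs.label] = max(situation.get(obs.label, 0.0), obs.value)
    return situation


def choose_response(situation: Dict[str, float]) -> Response:
    """Pick speech and gesture from the merged situation (illustrative rules)."""
    if situation.get("heart_rate", 0.0) > 110 or situation.get("tired", 0.0) > 0.6:
        return Response(speech="You seem tired. How about a short rest?",
                        gesture="tilt_head")
    if situation.get("smile", 0.0) > 0.5:
        return Response(speech="You look happy today!", gesture="raise_arms")
    return Response(speech="Hello. How are you feeling?", gesture="nod")


if __name__ == "__main__":
    readings = [
        Observation("voice", "tired", 0.7),         # from a microphone
        Observation("face", "smile", 0.2),          # from a camera
        Observation("health", "heart_rate", 95.0),  # from a health device
    ]
    print(choose_response(estimate_situation(readings)))
```

A real system would replace the hand-written rules with recognition and dialogue components; the sketch only shows the shape of the data flow from several devices to one combined message.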

In the past, a high level of background knowledge of robot operating systems and programming languages was required in order to develop services based on robots or applications that involved the collaboration and control of multiple devices. To spread the use of robot-enabled services, we believe it is necessary to increase the number of application developers and to communicate the requirements for knowledge-processing and robot control components to facilitate the development of robot applications. To do this, we built a new technology comprising a robot services execution environment and an integrated development environment (IDE). The former is provided as a platform as a service (PaaS): a single system instance can support multiple applications used by multiple tenants (users), manage the mapping between devices and users, and enable devices to be easily connected. The latter is an IDE for software as a service (SaaS). The close cooperation between the execution environment and the development environment makes it possible for SaaS to do the following:

(1) Simplify the debugging and execution of applications

(2) Enable easy development of device feature management

(3) Enable application development, up to a certain level, using only a web browser

R-env enables anyone to easily add new devices to existing services (Fig. 2). It is based on an architecture that supports advances in interaction technology with the addition of the emotion-enhancing technology being developed at NTT [8].

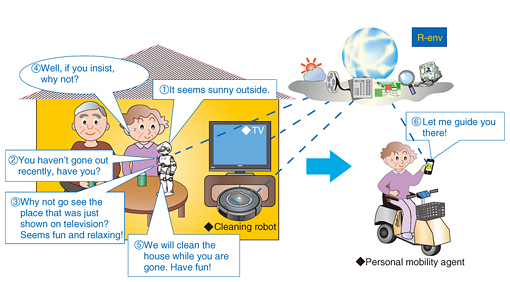
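
The execution environment's role described above, in which one instance serves several tenants while tracking which device belongs to which user, can be sketched as a small in-memory registry. This is a hedged illustration only: `DeviceRegistry`, its method names, and the tenant, user, and device identifiers are hypothetical and do not reflect R-env's actual interfaces.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


class DeviceRegistry:
    """Single shared instance that keeps each tenant's mappings separate."""

    def __init__(self) -> None:
        # (tenant, user) -> set of device IDs mapped to that user
        self._devices: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
        # (tenant, device) -> owning user, for reverse lookup
        self._owner: Dict[Tuple[str, str], str] = {}

    def connect(self, tenant: str, user: str, device: str) -> None:
        """Map a device to a user within one tenant."""
        key = (tenant, device)
        if key in self._owner:
            raise ValueError(
                f"{device!r} is already connected in tenant {tenant!r}")
        self._owner[key] = user
        self._devices[(tenant, user)].add(device)

    def devices_of(self, tenant: str, user: str) -> Set[str]:
        """Return a copy of a user's devices; lookups never cross tenants."""
        return set(self._devices.get((tenant, user), set()))

    def owner_of(self, tenant: str, device: str) -> str:
        """Return the user a device is mapped to, or raise KeyError."""
        return self._owner[(tenant, device)]


if __name__ == "__main__":
    registry = DeviceRegistry()
    registry.connect("care-home-a", "resident-1", "robot-01")
    registry.connect("care-home-a", "resident-1", "scale-07")
    # The same IDs may be reused by another tenant without any conflict.
    registry.connect("care-home-b", "resident-1", "robot-01")
    print(sorted(registry.devices_of("care-home-a", "resident-1")))
```

Keying every lookup by tenant is one simple way to let a single instance host several tenants; a production platform would also need authentication and persistent storage, which are outside the scope of this sketch.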

The use of R-env in our homes will make it possible to develop services that will integrate home appliances, healthcare devices, and robots with web services such as mail, schedulers, and weather forecasts. Furthermore, extending the scope of R-env outside the home will facilitate easy development of services using personal devices such as smartphones (Fig. 3).

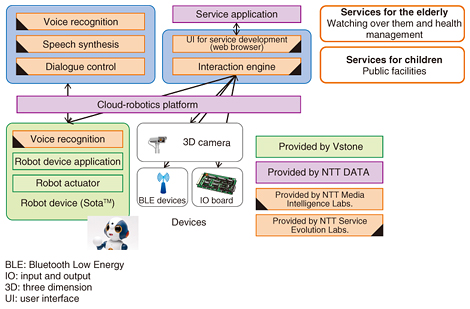

5. Use cases

An example of how the open innovation of R-env is supporting the robot vendor Vstone Co., Ltd. [9] is shown in Fig. 4. Vstone’s communication robot was made intelligent by using NTT’s voice recognition technology, dialogue control technology, speech synthesis technology, and sound-gathering technology. We are conducting experiments to confirm that the intelligent robot can, via R-env, collaborate with peripheral devices and/or other robots. For example, our intention is for robots to play an important role in supporting health management in places such as elderly housing and care facilities. The robots in these facilities will:

(1) Promote conversation and conduct health monitoring by talking with the elderly residents in collaboration with caregivers

(2) Provide personally tailored encouragement based on the physical condition of each person

6. Conclusion

At NTT, by combining technology that will make devices such as robots more intelligent with technology that will enable easy device collaboration, we believe it is possible for robots to collaborate with each other via the network and to support human activities and growth in various situations.

References
