Feature Articles: 2020 Showcase

Vol. 14, No. 12, pp. 36–42, Dec. 2016. https://doi.org/10.53829/ntr201612fa6

2020 Entertainment—A New Form of Hospitality Achieved with Entertainment × ICT

Abstract

This article introduces new applications of information and communication technology (ICT) in the entertainment field. Specifically, we describe two events involving the collaborative creation of new forms of kabuki using ICT: Dwango Co., Ltd.’s Cho Kabuki event held at Niconico Chokaigi (29–30 April 2016, Makuhari Messe, Chiba Prefecture, Japan) and Shochiku Company Limited’s KABUKI LION SHI-SHI-O held at the Japan KABUKI Festival (3–7 May 2016, Las Vegas, USA).

Keywords: Kabuki, ICT, hospitality

1. A new way to enjoy kabuki

Until now, kabuki has mostly been enjoyed live on stage. However, NTT Service Evolution Laboratories has used information and communication technology (ICT) to create new ways to enjoy kabuki in each of the pre-performance, mid-performance, and post-performance periods.

1.1 Pre-performance

First, for the pre-performance period, we created an interactive exhibit called Henshin Kabuki (transformation kabuki). This non-theater kabuki performance was held at the KABUKI LION Interactive Showcase in front of a Las Vegas theater. The exhibit features the unique make-up methods characteristic of kabuki and uses the henshin (transformations) of kabuki actors to showcase the culture and technological prowess that Japan prides itself on, creating a wondrous experience.

Participants chose a kumadori (stage makeup worn by kabuki actors) mask and stood in front of the large-screen monitors. The angle-free object search technology automatically detected the type of mask chosen regardless of the mask’s angle or tilt and superimposed the kumadori patterns onto the faces of the participants using augmented reality (AR) (Photo 1).
The AR superimposition was made possible by edge computing technology, which lets servers located away from the display process images at high speed, so that the patterns are rendered clearly and without blur even when the subjects are moving quickly.

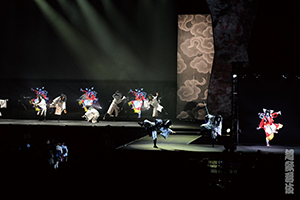

In addition, projection mapping was done on a three-dimensional (3D) face object. All dynamic performance aspects of kabuki, such as timing and facial expressions, were maintained and combined with the concept of an amplified experience that extracts the key performance points and makes them stand out in a larger-than-life way.

Moreover, fifty kumadori masks were hung on a wall. The masks, which ordinarily do not move at all, made use of the same HenGenTou (deformation lamp) light projection technology that was used in the main performance to take on a diverse variety of facial expressions such as laughter and anger.

Of the 1018 participants from many different age groups, nationalities, and genders, approximately 90% reacted positively to the new interactive experience. The mask-recognition technology that used angle-free object search had an accurate identification rate of 99.2%. However, some challenges for the future also became apparent, such as finding a way to guide guests through an exhibit that contains multiple aspects and optimizing the lexicon tuning for angle-free object search.

1.2 Mid-performance

The mid-performance period introduced the Cho Kabuki performance and a highly realistic remote rendition of KABUKI LION SHI-SHI-O (Photos 2 and 3).

1.2.1 KABUKI LION SHI-SHI-O

Four initiatives were carried out to achieve the highly realistic remote rendition of the KABUKI LION SHI-SHI-O main performance held in Las Vegas.

(1) Room reproduction using 4K multi-screens (Photo 4)

Footage from nine 4K-resolution cameras was encoded using high-compression HEVC (High Efficiency Video Coding). MMT (MPEG Media Transport), which enables multiple video and audio streams to be flexibly synchronized in real time, was then used to create the first international 4K multi-screen relay. The Haneda (Japan) venue’s omnidirectional screens on the front stage (three 180-inch screens), stage passage (one lower screen, three rear screens), and ceiling (two upper screens) all simultaneously showed 4K video footage. The display received an energetic response from over 80% of the 198 participants, who consisted of media personnel and invitees. Over 70% responded in a highly favorable manner, stating that they would like to visit again. On the system side, the synchronization and latency both produced positive results as expected, and various parameters were confirmed for future use. However, problems arose with the linkages between the multiple screens.
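The idea of keeping several video streams in step can be illustrated with a small sketch. This is not MMT itself and not the system used at the venues; it only assumes that each frame carries a presentation timestamp and that stream IDs run from 0 to 8, and it holds frames back until all nine streams have delivered the same timestamp. The class name `SyncBuffer` and its methods are hypothetical.

```python
from collections import defaultdict

NUM_STREAMS = 9  # nine 4K cameras, per the article


class SyncBuffer:
    """Hold frames until every stream has delivered the same timestamp.

    Illustrative only: stream IDs are assumed to be 0..num_streams-1.
    """

    def __init__(self, num_streams=NUM_STREAMS):
        self.num_streams = num_streams
        self.pending = defaultdict(dict)  # timestamp -> {stream_id: frame}

    def push(self, stream_id, timestamp, frame):
        """Store one frame; return the full frame set once all streams arrive."""
        slot = self.pending[timestamp]
        slot[stream_id] = frame
        if len(slot) < self.num_streams:
            return None
        # Drop this timestamp and any older incomplete ones to bound memory.
        for ts in [t for t in self.pending if t <= timestamp]:
            del self.pending[ts]
        return [slot[i] for i in range(self.num_streams)]
```

A display controller would call `push` for every decoded frame and send a frame set to the screens only when the call returns a list, so that no screen runs ahead of the others.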

(2) Remote greeting using pseudo-3D images generated by Kirari! (Photo 5)

Subject extraction technology was used to finely extract live footage of the performers from their backgrounds. Synchronization of audio with the pseudo-3D video display at the remote stage enabled Somegoro Ichikawa, who was present at the live event in Las Vegas, to greet those viewing the remote stage at the Haneda venue as if he were there in person. This was a world first for this technology. Despite a lack of prior preparation and confirmation of lighting and camera locations for the actual performance, on the system side, the subject extraction and shadow generation were carried out with high precision. Over 70% of viewers replied that the experience felt as if Somegoro Ichikawa were really standing on the stage in front of them.

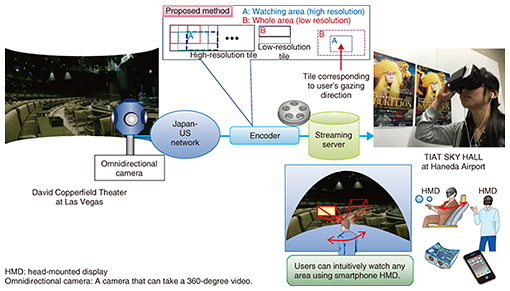

(3) 4K omnidirectional live footage broadcast for mobile devices (Photo 6)

By compressing video and transmitting high-definition footage solely in the direction that the user is looking, we were able to create a system that controlled bandwidth while maintaining a high-quality viewing experience (Fig. 1). On the technical side, we achieved the construction and stable operation of a live transmission that reduced bandwidth by approximately 80% compared to transmitting the entire 4K omnidirectional video. In addition, many users replied that they felt they were actually at the theater. We confirmed that we were able to meet the needs of omnidirectional live viewing. However, certain problems arose with image quality.

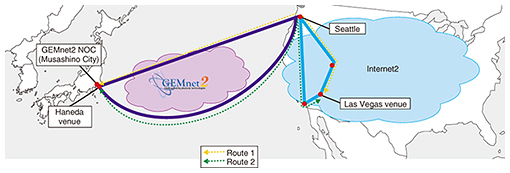
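The bandwidth saving from sending high-definition footage only in the viewing direction can be sketched with a simple tile model. The following is a minimal illustration under assumed values, not the transmission system described here: the panorama is split into equal horizontal tiles, tiles overlapping the field of view are sent at a high bitrate, and the rest at a low one. The function names, tile count, and bitrates are all hypothetical.

```python
def viewport_tiles(yaw_deg, fov_deg, num_tiles):
    """Return indices of horizontal tiles that overlap the viewer's field of view."""
    tile_width = 360.0 / num_tiles
    visible = set()
    for i in range(num_tiles):
        centre = (i + 0.5) * tile_width
        # Smallest angular distance between the tile centre and the view direction.
        diff = abs((centre - yaw_deg + 180.0) % 360.0 - 180.0)
        if diff < fov_deg / 2 + tile_width / 2:
            visible.add(i)
    return visible


def bitrate_mbps(visible, num_tiles, high_mbps, low_mbps):
    """Total bitrate when visible tiles are high quality and the rest low quality."""
    return len(visible) * high_mbps + (num_tiles - len(visible)) * low_mbps


# Assumed example values: 12 tiles, 90-degree field of view, looking at yaw 0.
tiles = viewport_tiles(yaw_deg=0.0, fov_deg=90.0, num_tiles=12)
adaptive = bitrate_mbps(tiles, 12, high_mbps=2.0, low_mbps=0.25)
full = bitrate_mbps(set(range(12)), 12, high_mbps=2.0, low_mbps=0.25)
saving = 1.0 - adaptive / full
```

The saving depends entirely on the assumed tile count, field of view, and bitrate ratio, so the figure this sketch produces should not be read as a reproduction of the roughly 80% reduction reported above.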

(4) HenGenTou performance (Photo 7)

HenGenTou (deformation lamp) technology was used to create the appearance of a city in the middle of the ocean at the top half of the stage background (15 m high × 4.5 m wide). This technology was also used to present rippling of the water in the ocean. However, several problems related to the characteristics of the stage were discovered. For example, when spotlights were shone on actors, the stage setting and movements at the top half of the background did not stand out very much. We also recognized the importance of having a script in line with the performance.

We constructed a video transmission network linking the Las Vegas and Haneda venues for the remote live viewing (Fig. 2). Although the time available was limited, we were able to construct two paths with a guaranteed bandwidth of 1 Gbit/s by combining lines from Internet2, the US research consortium, and GEMnet2, the network testbed owned and operated by NTT Service Evolution Laboratories. During the performance, packet losses were small enough for the error correction function to handle. Future challenges include monitoring micro-burst traffic, reducing costs, and further reducing construction times.
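How an error correction function can absorb a small amount of packet loss is easiest to see with the simplest possible scheme. The article does not say which scheme was used on this network, so the sketch below uses single XOR parity purely as an illustration: one parity packet is sent per group of equal-length packets, and any one lost packet in the group can be rebuilt at the receiver. The function names are hypothetical.

```python
def xor_parity(packets):
    """Build one parity packet as the byte-wise XOR of equal-length packets."""
    parity = bytearray(len(packets[0]))
    for pkt in packets:
        for i, b in enumerate(pkt):
            parity[i] ^= b
    return bytes(parity)


def recover(received, parity):
    """Rebuild the single missing packet (marked None) in a group."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        raise ValueError("XOR parity can repair exactly one lost packet per group")
    present = [p for p in received if p is not None]
    restored = list(received)
    # XOR of all surviving packets and the parity equals the lost packet.
    restored[missing[0]] = xor_parity(present + [parity])
    return restored


group = [b"abcd", b"efgh", b"ijkl"]
parity = xor_parity(group)
repaired = recover([b"abcd", None, b"ijkl"], parity)
```

This also shows why loss has to stay small: with one parity packet per group, two losses in the same group cannot be repaired, which is one reason bursts of loss are harder to handle than isolated drops.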

1.2.2 Cho Kabuki

The Cho Kabuki event consisted of five performances of the kabuki play Hanakurabe Senbonzakura. Subject extraction and virtual speaker technologies were used to create highly realistic performances by extracting the figure of the samurai Sato Tadanobu (played by Shido Nakamura) and making it feel as if voices were coming directly from Hatsune Miku’s mouth. The performance received high acclaim as a new form of kabuki performance, with many mentions from television and Internet media sources.

1.3 Post-performance

For the post-performance period, we created an experience that used a cardboard craft box combined with a smartphone to provide a simple 3D video display. Looking inside the box revealed a palm-sized Hatsune Miku rising up in 3D. This allowed users to experience the atmosphere of Cho Kabuki.

2. Future development

This project was the first step for kabuki × ICT. In preparation for the next generation of kabuki, we will pursue even more realistic forms of expression and create new kabuki space experiences (installations). We also plan to continue these stage performances and live remote performances in more trials with general users from all over the world to obtain their feedback. In addition to pursuing the development of practical-use services with business potential, we plan to take the knowledge gained from ventures in the field of entertainment and apply it to hospitality, offering new and emotionally engaging experiences with our eyes on 2020.
