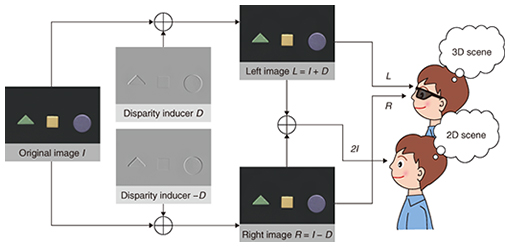

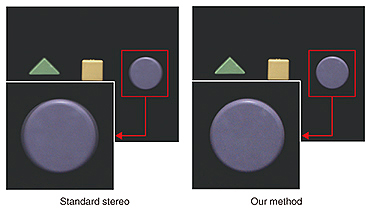

Feature Articles: Communication Science that Enables corevo®—Artificial Intelligence that Gets Closer to People

Vol. 15, No. 11, pp. 29–34, Nov. 2017. https://doi.org/10.53829/ntr201711fa5

Synthesizing Ghost-free Stereoscopic Images for Viewers without 3D Glasses

Abstract

When a conventional stereoscopic display is viewed without three-dimensional (3D) glasses, image blurs, or ghosts, are visible due to the fusion of stereo image pairs. This artifact severely degrades 2D image quality, making it difficult to simultaneously present clear 2D and 3D content. To overcome this limitation, we recently proposed a method to synthesize ghost-free stereoscopic images. Our method gives binocular disparity to a 2D image and drives human binocular disparity detectors by the addition of a quadrature-phase pattern that induces spatial subband phase shifts. The disparity-inducer patterns added to the left and right images are identical except for the contrast polarity. Physical fusion of the two images cancels out the disparity-inducer components and makes only the original 2D pattern visible to viewers without glasses.

Keywords: stereoscopy, spatial phase shift, backward compatible

1. Introduction

In standard three-dimensional (3D) stereoscopic displays, a stereo pair (a pair of images each representing a view from each eye position) is simultaneously presented on the same screen. For viewers wearing 3D glasses, an image for the left eye and an image for the right eye are separately presented to the corresponding eyes, and viewers can enjoy 3D depth impressions due to binocular disparity (small differences between the image pair). For viewers who unfortunately watch the display without 3D glasses, however, the superposition of the left and right images produces uncomfortable image blurs and bleeding, which are referred to as ghosts in this article.

Stereoscopic ghosts, caused by the binocular disparity between the stereo image pair, seriously limit the utility of the standard 3D display. In particular, ghosts make the display unsuitable in situations where the same screen is viewed by a heterogeneous group of people, including those who are not supplied with stereo glasses or who have poor stereopsis (the perception of depth produced by the reception in the brain of visual stimuli from both eyes in combination).

2. Stereo image synthesis to achieve perfect backward compatibility

We recently proposed a method to synthesize completely ghost-free stereoscopic images [1]. Our technique gives binocular disparity to a 2D image by the addition of a quadrature-phase pattern that induces spatial subband phase shifts (Fig. 1). The patterns added to the left and right images, which we call disparity inducers, are identical except that they are opposite in contrast polarity. Physical fusion of the two images cancels out the disparity-inducer components and brings the image back to the original 2D pattern (Fig. 2). This is how binocular disparity is hidden from viewers without glasses but remains visible to viewers with them.

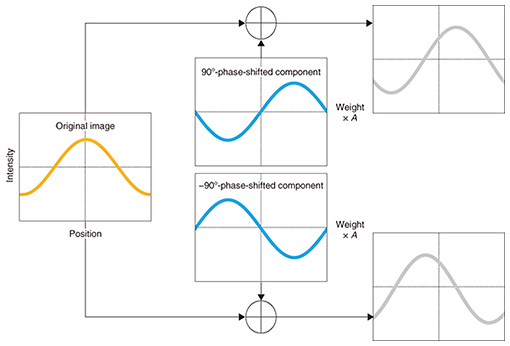

3. Technical details

Our method synthesizes a stereo image by linearly adding a disparity inducer. To realize this process, we manipulate binocular disparity based on a local phase shift. For the sake of simplicity, let us assume that the original image is a vertical grating whose intensity profile is a sinusoidal wave. We use a quadrature (quarter-cycle)-phase-shifted component of the original image as a disparity inducer (Fig. 3). When the quadrature-phase-shifted component is added to the original image with the same amplitude, the resulting composite image is a wave shifted in phase by one-eighth of a cycle from the original one.

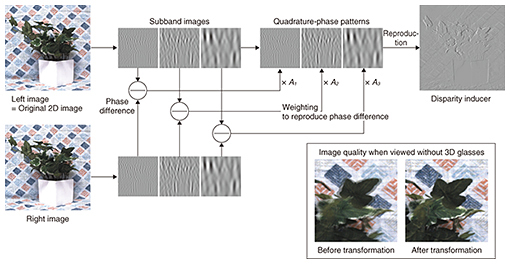
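The phase arithmetic for a single grating can be checked numerically. The following is a minimal sketch, not the authors' implementation: the function names `make_stereo_grating` and `phase_of`, the weight `a`, and the sampling choices are all assumptions made for illustration. It relies on the identity sin(x) + a·cos(x) = sqrt(1 + a²)·sin(x + arctan a), so a weight of a = 1 yields a shift of one-eighth of a cycle (π/4), and the average of the two views returns the original grating.

```python
import numpy as np

def make_stereo_grating(x, freq, a):
    """Return (original, left, right) for a sinusoidal grating.

    x    : sample positions covering a whole number of cycles
    freq : spatial frequency in cycles per unit of x (illustrative)
    a    : weight of the quadrature-phase component (hypothetical name)
    """
    original = np.sin(2 * np.pi * freq * x)
    # Disparity inducer: the original shifted by a quarter cycle, scaled by a.
    inducer = a * np.cos(2 * np.pi * freq * x)
    left = original + inducer    # phase shifted by +arctan(a)
    right = original - inducer   # phase shifted by -arctan(a)
    return original, left, right

def phase_of(signal, x, freq):
    """Phase (radians) of the `freq` component, measured relative to a sine."""
    s = np.sum(signal * np.sin(2 * np.pi * freq * x))
    c = np.sum(signal * np.cos(2 * np.pi * freq * x))
    return np.arctan2(c, s)

if __name__ == "__main__":
    x = np.arange(256) / 256.0           # one unit of length, 256 samples
    original, left, right = make_stereo_grating(x, freq=4, a=1.0)
    print("left phase (cycles): ", phase_of(left, x, 4) / (2 * np.pi))
    print("right phase (cycles):", phase_of(right, x, 4) / (2 * np.pi))
    # Fusion (here modeled as the average of both views) cancels the inducer.
    print("fusion equals original:", np.allclose((left + right) / 2, original))
```

Changing `a` changes the phase shift to arctan(a), which is how the amplitude of the quadrature component sets the size of the disparity in this simplified setting.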

By contrast, when the quadrature-phase-shifted component is subtracted from the original image, the phase of the original wave is shifted in the opposite direction by the same amount (one-eighth of a cycle when the two components have equal amplitude). This phase difference between the resulting images is used as a disparity for producing a depth impression. Importantly, we can control the size of the phase shift by modulating the amplitude of the quadrature-phase component. Therefore, we can reproduce the desired amount of disparity. The amount of phase shift required to reproduce a specific size of disparity depends on the spatial frequency and the orientation of the original grating. Fusion of the composite images cancels out the effects of the quadrature-phase component and makes the image profile the same as the original one.

4. Application to general images

In general, any arbitrary image can be represented as a combination of sinusoids by a 2D Fourier transform. Thus, the disparity manipulation described above can be easily applied to arbitrary images as long as the disparity for each sinusoidal component is spatially uniform. However, when we want to reproduce a structured map of binocular disparity (disparity map), we need spatially localized information about the spatial frequency and orientation of an input image. To analyze this local structure information, we use the steerable pyramid, which decomposes an image into subband images, each representing local responses tuned to different spatial frequencies and orientations [2]. The process to generate a disparity inducer is as follows:

(1) The system first decomposes an input image into subband images using the steerable filters and obtains quadrature-phase-shifted versions of the subband images.
(2) The quadrature-phase patterns are weighted so as to reproduce disparity sizes given by an input disparity map. The weight sizes are computed based on the spatial frequency and orientation of each of the quadrature-phase patterns.
(3) Finally, the disparity inducer is obtained by reconstructing the weighted quadrature-phase subbands.

There is a huge amount of 3D content in the conventional stereo format. We can also transform that content into our ghost-free format. The most straightforward way to achieve this is to compute a disparity map from a given stereo pair. Then, one can generate a disparity inducer in the same way as described above, using one of the stereo images as the original 2D image to which a disparity inducer is added. However, we present here a more concise way, in which we compute disparity as a phase difference between a given stereo pair and calculate the weight for the quadrature-phase-shifted image directly from the phase difference. When we use the left image of an input stereo pair as the original 2D image to which the disparity is added, the process to generate a disparity inducer is as follows (Fig. 4):

(1) The system first decomposes both of the input stereo images into a series of subband images using steerable filters.
(2) Then we compute the phase difference between each of the corresponding subband image pairs.
(3) From each of the subband images of the left image, we extract the quadrature-phase component.
(4) The quadrature-phase patterns are weighted so as to reproduce the phase differences obtained in (2).
(5) The disparity inducer is obtained by reconstructing the weighted quadrature-phase subbands.

After the disparity inducer is given, the left and right stereo images in our stereo format are respectively generated by the addition/subtraction of the disparity inducer to/from the input left image. By processing every frame in the same manner, we can transform not only static stereo pictures, but also stereo movie sequences into our ghost-free format.
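The five steps for converting a conventional stereo pair can be sketched for a 1D signal and a single subband. This is a heavily simplified illustration under stated assumptions, not the published pipeline: a complex band-pass filter built with the FFT stands in for one steerable-pyramid subband, the helper names `analytic_band` and `ghost_free_pair` are hypothetical, and the weight tan(Δφ/2) is derived here from the requirement that adding and subtracting the inducer should produce a total phase difference of Δφ, rather than taken from the article.

```python
import numpy as np

def analytic_band(signal, lo, hi):
    """Complex band-pass response of a real 1D signal.

    Keeps only positive frequencies with index in [lo, hi] (cycles per
    signal length). The real part is the band-passed signal and the
    imaginary part is its quadrature-phase (quarter-cycle-shifted) version.
    Stand-in for one steerable-pyramid subband (assumption).
    """
    n = signal.size
    spectrum = np.fft.fft(signal)
    k = np.fft.fftfreq(n, d=1.0 / n)      # integer frequency indices
    mask = (k >= lo) & (k <= hi)
    return np.fft.ifft(2.0 * spectrum * mask)

def ghost_free_pair(left, right, lo, hi):
    """Return (new_left, new_right, inducer) for a single subband."""
    b_left = analytic_band(left, lo, hi)       # step (1)
    b_right = analytic_band(right, lo, hi)
    # Step (2): local phase difference between corresponding subbands.
    delta = np.angle(b_right * np.conj(b_left))
    # Step (3): quadrature-phase component of the left subband.
    quadrature = b_left.imag
    # Step (4): weight chosen so that +inducer / -inducer differ by delta.
    # Assumed formula; it is only usable for |delta| < pi.
    weight = np.tan(delta / 2.0)
    # Step (5): with one subband, reconstruction is just the weighted band.
    inducer = weight * quadrature
    return left + inducer, left - inducer, inducer

if __name__ == "__main__":
    n = 256
    theta = 2 * np.pi * 8 * np.arange(n) / n
    left = np.cos(theta)
    right = np.cos(theta + 0.6)               # right view leads by 0.6 rad
    new_left, new_right, inducer = ghost_free_pair(left, right, 4, 12)
    out_delta = np.angle(analytic_band(new_right, 4, 12)
                         * np.conj(analytic_band(new_left, 4, 12)))
    print("reproduced phase difference:", out_delta.mean())
    print("fusion equals input left:",
          np.allclose((new_left + new_right) / 2, left))
```

In this sketch the two output views are shifted symmetrically about the input left image, so their average is exactly that image. A full implementation would repeat the same steps over every scale and orientation of a 2D pyramid and would need to handle regions where the subband amplitude is close to zero and the phase estimate is unreliable.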

The image processing described above is designed so as to effectively drive the binocular mechanisms in our brain. The human visual system analyzes binocular disparity using a bank of multi-scale, orientation-tuned bandpass mechanisms similar to the steerable pyramid. Then the visual system computes the binocular disparity as the phase difference between the corresponding bandpass responses. Therefore, our technique can properly drive the viewer’s binocular vision by adding disparity as a spatial phase shift even though the synthesized stereo images are slightly different from those obtained in the real 3D world.

5. Comparison to previous approach

A previous solution to simultaneously present 3D content and ghost-free 2D content with a single display is to make a special image combination that cancels out, say, the right image component and leaves only the left image to viewers without 3D glasses [3, 4]. However, this method significantly sacrifices the contrast of the original image. In addition, it is incompatible with the standard stereo display system. Another approach to attenuate stereo ghosts is to compress the magnitude of binocular disparity [5]. This approach is compatible with the standard stereo displays, but it cannot perfectly remove the stereo ghosts. There is also a stereo-image-synthesis method that is similar to ours [6]. In this method, an edge-enhancing (derivative) filter is used to produce pseudo-disparity. However, this method cannot precisely control the size of disparities since it does not analyze the spatial frequency or orientation of the original image.

Our method can completely remove stereo ghosts while maintaining compatibility with standard stereo display hardware. Unlike the previous solution in which one of the stereo images is entirely canceled out, only the disparity inducer embedded in a stereo image pair is canceled out in our case. Thus, the reduction in the contrast of the original image is moderate or even unnecessary. Moreover, in our method, the size of the phase shift is controlled based on the spatial frequency and orientation of the original image. Therefore, we can easily reproduce natural depth structures that are indistinguishable from those of physically correct stereo images.

6. Limitation and future work

In exchange for the ability to present perfect ghost-free 2D images to viewers without glasses, our method has a slightly limited ability to present 3D images compared with the conventional position-shift method. Specifically, there is an upper limit on the disparity magnitude that can be given to the image. Adding a large disparity could affect the dynamic range and induce perceptual artifacts. Thus, when transforming standard stereo images to our ghost-free format, one may have to combine our method with a depth compression technique [5].

On the other hand, our method can provide 3D information in a slightly different way from the conventional methods because we can hide disparity information in 2D images. For example, one can use 3D glasses like a loupe to inspect complicated structures of an image on a personal computer monitor while otherwise looking at it without glasses. In addition, since our technique is based on the linear addition of disparity inducers, when combined with projection mapping, it can add to real objects depth structures that are visible only to viewers with glasses. The projection system can be used, for example, in an art museum to present additional depth impressions onto existing paintings without causing any conflicts with observers who want to appreciate their original appearances.

Finally, our method provides a simple way to linearly decompose a stereo image pair into a single image and a depth pattern (disparity inducer). If the disparity inducer is given, it is easy to transform any 2D content into 3D content. Whether this decomposition contributes to other applications such as effective image data compression remains to be investigated.

References
