Feature Articles: Communication Science for Achieving Coexistence and Co-creation between Humans and AI

1. Introduction

In recent years, virtual reality (VR) technology has attracted considerable attention, particularly in games and entertainment, owing to the emergence of high-performance, inexpensive devices such as head-mounted displays (HMDs). VR technology can also be used in various industries, for example, for surgical training in the medical field, worker training at over-the-counter stores, and safety education at construction sites. However, most recent VR systems present only visual information through HMDs, which is far from our actual experience in everyday life. To produce high-quality, realistic experiences, it is important to integrate multimodal information such as vision, audition, touch, and the sensation of body motion.

It is challenging to create an artificial sensation of physical walking in VR environments. To provide a sensation of walking in unlimited VR space despite having limited actual space for walking, some techniques physically cancel out the spatial displacement caused by walking. Other techniques use a visuohaptic interaction to modify the human spatial perception. With these techniques, both the brain’s motor commands and the user’s proprioceptive information from body movements can be utilized since the users actually move their bodies in space.

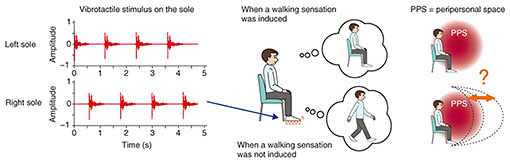

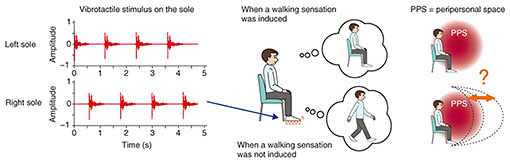

One major problem with these techniques, however, is that they require users to actually walk, which means that they cannot be applied to people who have difficulty walking. We therefore proposed a technique that applies multisensory cues, including proprioceptive or vestibular stimuli, to evoke a sensation of walking in seated users [1]. Our technique can be used, for example, in the living room without the users having to actually walk. This article introduces one of the techniques for creating a pseudo-walking sensation using multisensory cues and a way to evaluate that sensation (Fig. 1).

Fig. 1. Possible relationship between vibration on the soles of the feet and the size of peripersonal space.

2. Vibrations on the soles

We found that vibrations on the soles of the feet play one of the most important roles in evoking the sensation of walking. This seems reasonable because the sole is an interface between the body and the ground, and other studies have provided evidence of the importance of identifying a floor’s materials while walking or maintaining body posture. Moreover, there seems to be a clear link between the tactile input from the soles hitting the ground and sensory feedback during walking such as the primitive reflex seen in newborns.

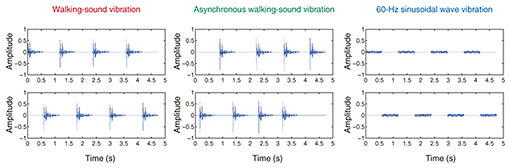

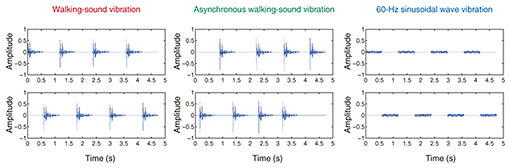

In our system, a vibration pattern consisting of a recorded footstep sound, processed through a low-pass filter at 120 Hz, was presented to the heel of the foot using voice-coil vibrators (Fig. 2). We also presented two comparison patterns. One was an asynchronous pattern that used the same footstep sound but with the onset of each step desynchronized and randomized. The other was a pattern of 60-Hz sinusoidal vibrations that differed from the footsteps but was synchronized to the onset of each step. Note that the average amplitudes of the vibration patterns were set to be identical.

Fig. 2. Vibrotactile stimulus patterns presented on the soles of the feet in the user study.
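The three vibration patterns described above can be sketched roughly as follows. This is a minimal illustration and not the stimulus-generation code used in the study: the sample rate, the one-pole filter, the decaying noise burst standing in for the recorded footstep, the step interval, and the use of root-mean-square (RMS) level as the "average amplitude" are all assumptions made for the example.

```python
import math
import random

SAMPLE_RATE = 2000  # Hz; assumed value, chosen only to keep the sketch small


def low_pass(signal, cutoff_hz, sample_rate=SAMPLE_RATE):
    """One-pole low-pass filter (assumed type; the article gives only 120 Hz)."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out, prev = [], 0.0
    for x in signal:
        prev = prev + alpha * (x - prev)
        out.append(prev)
    return out


def placeholder_footstep(duration_s=0.1, seed=0):
    """Hypothetical stand-in for the recorded footstep: a decaying noise burst."""
    rng = random.Random(seed)
    n = int(duration_s * SAMPLE_RATE)
    return [rng.uniform(-1.0, 1.0) * math.exp(-30.0 * i / SAMPLE_RATE) for i in range(n)]


def sine_burst(freq_hz=60.0, duration_s=0.1):
    """Short 60-Hz sinusoidal burst used for the sinusoidal pattern."""
    n = int(duration_s * SAMPLE_RATE)
    return [math.sin(2.0 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def place_bursts(burst, onsets_s, total_s):
    """Add one copy of the burst at each onset time on a silent track."""
    track = [0.0] * int(total_s * SAMPLE_RATE)
    for onset in onsets_s:
        start = int(onset * SAMPLE_RATE)
        for i, value in enumerate(burst):
            if start + i < len(track):
                track[start + i] += value
    return track


def rms(signal):
    return math.sqrt(sum(v * v for v in signal) / len(signal))


def match_rms(signal, target):
    """Scale a signal so that its RMS level equals the target."""
    scale = target / rms(signal)
    return [v * scale for v in signal]


def make_patterns(total_s=4.0, step_interval_s=0.5, seed=1):
    rng = random.Random(seed)
    footstep = low_pass(placeholder_footstep(), 120.0)
    n_steps = int(total_s / step_interval_s)
    regular = [k * step_interval_s for k in range(n_steps)]
    randomized = sorted(rng.uniform(0.0, total_s - 0.1) for _ in range(n_steps))

    walking = place_bursts(footstep, regular, total_s)          # footsteps, regular onsets
    asynchronous = place_bursts(footstep, randomized, total_s)  # footsteps, random onsets
    sinusoid = place_bursts(sine_burst(), regular, total_s)     # 60-Hz bursts, regular onsets

    target = rms(walking)
    return {
        "walking": walking,
        "asynchronous": match_rms(asynchronous, target),
        "sinusoid": match_rms(sinusoid, target),
    }
```

Calling `make_patterns()` returns three equal-length tracks with matched RMS levels, so that only the waveform and the timing differ between them.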

3. Expansion of peripersonal space

In addition to collecting subjective ratings, we examined the change in the boundary of peripersonal space (PPS) when a sensation of pseudo-walking was induced. PPS is the space immediately surrounding our body in which we mediate physical or social interactions with others or the external world.

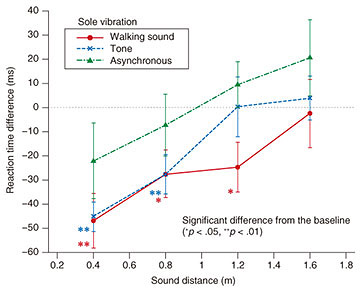

A number of studies have provided evidence of the existence of dedicated neurophysiological, perceptual, or behavioral functions in PPS. Previous studies have shown that a moving sound that gives the impression of a sound source approaching the body shortens tactile reaction times (RTs) when it is presented close to the stimulated body part, that is, within and not outside the PPS [2]. We therefore applied this method to examine PPS boundaries by measuring the distance from the participant's body at which approaching sounds affect the tactile RT. We found that vibration on the soles evoking a walking sensation shortens the RT for detecting a tactile stimulus near the body when one is listening to approaching sounds [1].

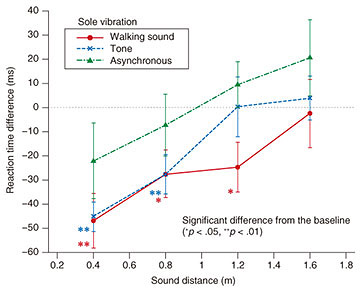

Specifically, we asked the participants to detect a vibrotactile stimulus (suprathreshold, frequency of 150 Hz) on the chest as quickly as possible. Approaching sounds were presented by changing the intensity of a white-noise source to simulate its distance from the body. The tactile stimulus was given at four different temporal delays from the sound onset, so that the tactile information on the chest was processed when the sound was perceived at four different distances from the participant.
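The relationship between the tactile delay and the simulated sound distance can be illustrated with a short sketch. The constant approach speed, the inverse-square attenuation law, and every numerical value in the usage note are assumptions for illustration only; the article does not specify how the intensity profile was computed.

```python
import math


def source_distance(t_s, start_m, end_m, duration_s):
    """Simulated distance of the sound source at time t, assuming constant speed."""
    frac = min(max(t_s / duration_s, 0.0), 1.0)
    return start_m + (end_m - start_m) * frac


def gain_db(distance_m, reference_m=1.0):
    """Level relative to the reference distance under a free-field
    inverse-square law (assumed attenuation model)."""
    return -20.0 * math.log10(distance_m / reference_m)


def distances_at_delays(delays_s, start_m, end_m, duration_s):
    """Simulated source distance at the moment of each tactile stimulus."""
    return [source_distance(d, start_m, end_m, duration_s) for d in delays_s]
```

For example, with a hypothetical source that moves from 2.0 m to 0.4 m over 4 s, a tactile stimulus delivered 2 s after sound onset would coincide with a simulated distance of about 1.2 m.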

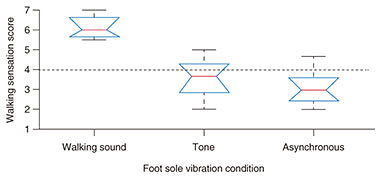

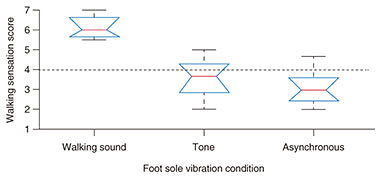

Our results showed that the vibrations on the soles boosted the processing of a vibrotactile stimulus on the chest when the sound source was within a limited distance from the body. When the approaching sound was located 1.2 m from the participant's body, the tactile RTs in the walking-sound vibration condition were shorter than those in the asynchronous walking-sound condition, the sinusoidal vibration condition, and the baseline condition (Fig. 3). This finding indicates that the PPS boundaries in the walking-sound condition expanded forward compared to those in the other vibration conditions. In addition, the walking-sound condition received the highest subjective rating of walking sensation (Fig. 4). We speculate that there is a synergy between the subjective ratings and the multisensory facilitation.

Fig. 3. Mean RT difference for tactile stimulus on the chest between the no-vibration baseline and sole vibration conditions as a function of sound distance from the participant.

Fig. 4. Box plots of subjective walking scores of vibrotactile stimulus on the soles.
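The measure plotted in Fig. 3 is a mean RT difference from the no-vibration baseline at each sound distance. A minimal sketch of that computation is given below. The data layout, the function name, and the sign convention (condition minus baseline, so that negative values mean faster responses than baseline) are assumptions, and the sketch contains no data from the study.

```python
from statistics import mean


def mean_rt_difference(rts_ms):
    """Mean RT of each vibration condition minus the baseline mean RT.

    rts_ms maps condition -> {sound distance in m -> list of RTs in ms}
    and must include a "baseline" entry (hypothetical layout).
    """
    baseline = rts_ms["baseline"]
    diffs = {}
    for condition, by_distance in rts_ms.items():
        if condition == "baseline":
            continue
        diffs[condition] = {
            distance: mean(rts) - mean(baseline[distance])
            for distance, rts in by_distance.items()
        }
    return diffs
```

The function takes per-trial RTs grouped by condition and distance and returns one difference value per condition and distance, which is the form in which Fig. 3 presents the results.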

4. Toward a more realistic sensation

At the NTT Communication Science Laboratories’ Open House 2019 held in May 2019, we conducted a demonstration in which subjects sat in a motorized chair, and vibrations were conveyed to the soles of their feet to create a very realistic sensation of pseudo-walking (Fig. 5). We received feedback from a number of participants who said that they felt a clear walking sensation.

Fig. 5. Pseudo-walking sensation created using a motorized chair and vibration shoes.

5. Conclusion and future work

To the best of our knowledge, aside from previous studies by our group, this is the first demonstration of creating a sensation of walking using multisensory stimulation without any actual body action. Such research on human characteristics is ongoing at NTT Communication Science Laboratories as part of efforts to investigate various issues [3–5]. This kind of technology will open the door to providing rich VR experiences, which is a relatively new field.

The characteristics of human perception have been extensively investigated in developing conventional video and audio systems. In the future, various kinds of sensory information will be considered in order to develop sophisticated interactive systems. Thus, we will continue to focus not only on understanding the mechanisms of sensorimotor processing in the brain, but also on finding prerequisites for developing interactive and natural user-friendly interfaces.

Acknowledgment

This research was conducted in part with Tokyo Metropolitan University and Toyohashi University of Technology. This research was supported by a JSPS (Japan Society for the Promotion of Science) Grant-in-Aid for Scientific Research (B) #18H03283 (Principal Investigator: Tomohiro Amemiya).

References

[1] T. Amemiya, Y. Ikei, and M. Kitazaki, “Remapping Peripersonal Space by Using Foot-sole Vibrations Without Any Body Movement,” Psychol. Sci., Vol. 30, No. 10, pp. 1522–1532, 2019.

[2] J.-P. Noel, P. Grivaz, P. Marmaroli, H. Lissek, O. Blanke, and A. Serino, “Full Body Action Remapping of Peripersonal Space: The Case of Walking,” Neuropsychologia, Vol. 70, pp. 375–384, 2015.

[3] T. Amemiya, “Perceptual Illusions for Multisensory Displays,” Proc. of the 22nd International Display Workshops, Vol. 22, pp. 1276–1279, Otsu, Japan, Dec. 2015.

[4] T. Amemiya, S. Takamuku, S. Ito, and H. Gomi, “Buru-Navi3 Gives You a Feeling of Being Pulled,” NTT Technical Review, Vol. 12, No. 11, 2014. https://www.ntt-review.jp/archive/ntttechnical.php?contents=ntr201411fa4.html

[5] T. Amemiya, “Haptic Interface Technologies Using Perceptual Illusions,” Proc. of 20th International Conference on Human-Computer Interaction (HCI International 2018), pp. 168–174, Las Vegas, NV, USA, July 2018.

Tomohiro Amemiya

Senior Research Scientist, Human Information Science Laboratory, NTT Communication Science Laboratories.*

He received a B.S. and M.S. in mechano-informatics from the University of Tokyo in 2002 and 2004, and a Ph.D. in information science and technology (biomedical information science) from Osaka University in 2008. He was a research scientist at NTT Communication Science Laboratories from 2004 to 2015 and a distinguished researcher from 2015 to 2019. Since 2019, he has been an associate professor in the Graduate School of Information Science and Technology, the University of Tokyo. He was concurrently an honorary research associate at the Institute of Cognitive Neuroscience, University College London (UCL), UK, in 2014–2015. His research interests include haptic perception, tactile neural systems, wearable interfaces, and assistive technologies. He is a director of the Virtual Reality Society of Japan and the Human Interface Society. He has received several academic awards including the Grand Prix du Jury at the Laval Virtual International Awards (2007) and the Best Demonstration Award (Eurohaptics 2014).

*Current affiliation and position: Associate Professor, the University of Tokyo