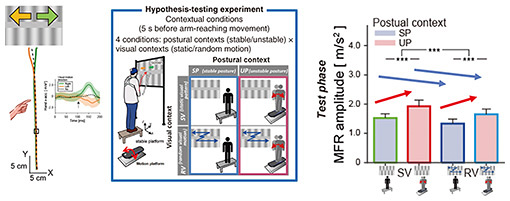

Front-line Researchers

Vol. 22, No. 1, pp. 1–6, Jan. 2024. https://doi.org/10.53829/ntr202401fr1

Elucidating the Relationship between Implicit Quick Manual Reactions and Mechanisms of Sensory-motor Information Processing in the Brain

Abstract

Many of our daily movements are skillfully controlled by unconscious information-processing mechanisms in the central nervous system, such as the stretch reflex. Although we may think that such an unconscious sensory-motor system is governed by a relatively primitive nervous system, some reflexive responses are generated by signals that undergo high-level visual information processing. Hiroaki Gomi, a senior distinguished researcher at NTT Communication Science Laboratories, was the first in the world to reveal the hidden mechanism of quick manual responses generated by background visual motion, called the manual following response. We asked him about the implicit reflexive manual reaction and the sensory-motor information processing in the brain, as well as his mindset and ideas about enjoying research.

Keywords: sensory-motor information processing, reflexive motor response, visual motion

Elucidating sensory-motor information processing in the brain for a new idea of novel user interfaces

—Could you tell us about your current research?

One of my research aims is to build a foundation for creating human-friendly user interfaces by discovering new latent sensory-motor systems and by elucidating and modeling the information processing of such systems.
In a previous interview (February 2021 issue), I explained that my colleagues and I had revealed that the stretch reflex, an unconscious body and limb movement in which motor commands are generated by the passive stretching of muscles, is regulated not only by proprioceptive information but also by body-state representations in the brain (i.e., the “body image” in the brain) obtained by integrating multiple types of sensory information, including vision. As an example application of somatosensory perception, I introduced a small device called “Buru-Navi,” which can navigate the user by stimulating the tactile sense of the finger, one of the somatosensory senses, with a particular vibration that gives the user the sense of being pulled.

I’m currently working on clarifying the mechanism of information processing in the brain when a person reaches for an object. I have been investigating this topic for quite some time. How does the brain use information from vision, touch, and sensors embedded in muscles to represent the external world and move the musculoskeletal system? When sensory information is slightly manipulated, mysterious phenomena of perception and movement occur, which are sometimes called “illusions.” These phenomena can occur both consciously and unconsciously. For example, if a black-and-white pattern placed in front of your eyes is moved to the left while you are moving your hand, your hand will move to the left involuntarily, and if the pattern is moved to the right, your hand will move to the right involuntarily. This phenomenon, called the manual following response (MFR), is considered to be a reflexive response triggered by visual motion: your hand moves involuntarily according to visual information instead of your own intention. We discovered this phenomenon about 20 years ago and have been investigating how this information processing is executed in the brain.
MFR has generally been explained by two alternative hypotheses. One, proposed by another research group, is that the representation of the reach target in the brain is shifted by visual motion, and that this shift results in the correction of hand movements. Our hypothesis is that large-field visual motion creates the illusion that the body has moved, and the hand moves as a compensatory action. We have recently succeeded in providing experimental evidence for our hypothesis [1].

In this hypothesis-testing experiment, we compared the magnitude of the hand response generated by visual motion (MFR) under four conditions that combine two postural contexts with two visual contexts: participants stood on either a stable or an unstable platform (postural context) while a grating pattern projected on the screen in front of them was either stationary or moved randomly (visual context). Using a Bayesian model that estimates postural changes from visual motion, we calculated the amplitude modulation of MFR expected under our hypothesis and predicted that MFR would be larger when the participant’s posture is unstable and smaller when the visual field is unstable. The actual measurements were consistent with the predictions of the Bayesian model and confirmed our hypothesis that MFR is a compensatory action for postural change (Fig. 1).

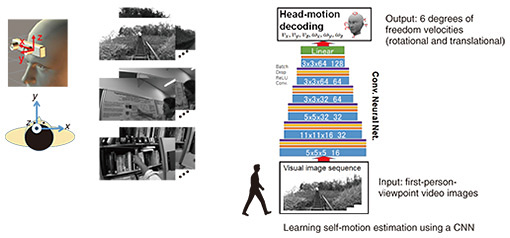
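As a rough illustration of how a Bayesian estimate of postural change can produce the predicted pattern, the following minimal sketch uses a one-dimensional Gaussian model. It is not the model used in the study [1]; it assumes a zero-mean Gaussian prior on postural velocity (wider when posture is unstable) and a visual-motion signal corrupted by Gaussian noise (noisier as a cue to self-motion when the visual field has been moving randomly). The function name `mfr_gain` and all variance values are hypothetical and chosen only for illustration.

```python
def mfr_gain(postural_var: float, visual_noise_var: float) -> float:
    """Weight given to visual motion when estimating self-motion.

    For a zero-mean Gaussian prior on postural velocity (variance
    postural_var) and a visual observation with additive Gaussian noise
    (variance visual_noise_var), the posterior mean of self-motion is
    gain * observed_visual_motion, with the gain returned here.
    """
    if postural_var < 0 or visual_noise_var < 0:
        raise ValueError("variances must be non-negative")
    total = postural_var + visual_noise_var
    if total == 0:
        raise ValueError("at least one variance must be positive")
    return postural_var / total


# Hypothetical variances in arbitrary units (assumptions, not measured data).
POSTURAL_VAR = {"stable platform": 1.0, "unstable platform": 4.0}
VISUAL_NOISE_VAR = {"stationary grating": 1.0, "randomly moving grating": 4.0}


def predicted_gains() -> dict:
    """Return the gain for each of the four posture-by-vision conditions."""
    return {
        (posture, visual): mfr_gain(p_var, v_var)
        for posture, p_var in POSTURAL_VAR.items()
        for visual, v_var in VISUAL_NOISE_VAR.items()
    }


if __name__ == "__main__":
    for (posture, visual), gain in predicted_gains().items():
        print(f"{posture:18s} | {visual:24s} | gain = {gain:.2f}")
```

Under these assumed values, the gain rises when the postural prior widens (unstable platform) and falls when the visual signal becomes less reliable (randomly moving grating), which is the qualitative direction of the prediction described above. If the compensatory hand response is taken to scale with the estimated self-motion, the MFR amplitude would follow the same ordering.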

The experiment described above demonstrated that MFR is a compensatory action for postural change, and previous studies revealed that visual-motion analysis in the brain is involved in MFR generation. By linking these two findings, we formulated the hypothesis that visual-motion analysis for MFR is formed to estimate self-motion (postural movement), and we tested this hypothesis synthetically. We first used a head-mounted camera with a built-in motion sensor to capture 30- to 70-s first-person-viewpoint videos of 30 scenes, such as walking indoors and outdoors, looking at a poster on the wall, and reaching for a book on a bookshelf. We then used a convolutional neural network (CNN) to estimate the camera motion (i.e., self-motion) with the images as input (Fig. 2).

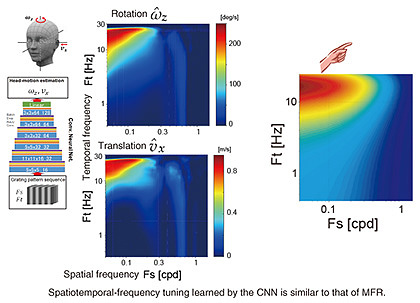

When first-person-viewpoint video that had not been used for training was input into the trained CNN, we found that the output was almost identical to the measured values of self-motion. This result indicates that self-motion, consisting of rotational and translational velocities in six degrees of freedom, can be inversely estimated from first-person-viewpoint video taken during self-motion. Interestingly, when we investigated the properties of the middle layer of the CNN, we found that it has information-processing characteristics similar to those of previously identified cells responsible for visual-analysis processing in the brain. We also investigated the spatiotemporal frequency tuning of the trained CNN and found that the tuning was similar to that of the MFR. Together with further analyses, this result supports our hypothesis that visual-motion analysis for MFR is formed to estimate self-motion (Fig. 3). I believe that the progress of these studies has deepened our understanding of the mechanisms that generate latent motor control using visual information. These results were published in a paper in “Neural Networks” in 2023 [2].

—You have come up with an interesting approach using Buru-Navi, right?

Regarding Buru-Navi, we have been investigating how the brain perceives vibrotactile information and creates the sensation of being pulled. Electroencephalograms measured while the vibration direction of Buru-Navi was changed to induce the sensation of being pulled revealed that the information that creates this sensation is coded around the parietal lobe. On the application side, we had a chance to try Buru-Navi for guiding a visually impaired person to a seat in a stadium. This trial allowed us to identify technical problems of a pedestrian-navigation system with Buru-Navi. I’d like to further advance our research and development to make Buru-Navi useful in the future.

—The understanding of the information-processing mechanism in the brain opens up a variety of possibilities. What are some of the challenges that lie ahead?

For the time being, I think it is important to further clarify the information processing between vision and motor commands in relation to MFR. The metaverse (virtual space) has been attracting much attention, and virtual interaction and communication within the metaverse are becoming more and more realistic. I believe that understanding the brain’s information processing is one of the most important aspects to consider in regard to interactions in the metaverse. In the current metaverse, most people interact with avatars by moving them with a cursor or mouse and communicate using video and voice. As devices such as head-mounted displays become more advanced, it will be possible to give users the sensation of being and moving in the metaverse. As that sense of immersion and presence increases, it will be necessary to understand the mechanism of information processing in the brain and to consider interface devices and safety measures on the basis of that understanding.
Understanding the information processing and sensory-motor system in the brain will also become significant in using digital twins, but we are not talking about achieving that level immediately. If we waited until the human brain is fully understood before starting to work on a digital twin, I think it would not become a reality no matter how many years we spent on it. In that sense, I think it is important to incorporate elements of the information-processing mechanism in the brain step by step as they come to be understood. In the meantime, I also believe that we, basic researchers, need to accelerate our efforts to clarify brain information processing related to problem settings in the real world.

Let’s enjoy doing research

—What do you keep in mind as a researcher?

I always try to enjoy doing research. When I develop a hypothesis, I talk with my fellow researchers about what will happen if we conduct an experiment to test the hypothesis, and sometimes there are disagreements. I’m relieved if my hypothesis is correct, but when it is not, it is important to think about the reasons and logic behind the failure, and I find this thought process interesting. To enjoy my research, I try to formulate hypotheses that can be verified in a relatively short time and try to enjoy the process of verifying those hypotheses by thinking of it as a kind of game.

In basic research, few research results can lead to business in the short term. Therefore, when considering a “large” hypothesis, I try to break it down into a set of “small” hypotheses that can be verified step by step. Even if a small hypothesis is experimentally shown to be wrong, I rethink my strategy for the next game: I review the logic of my hypothesis and devise a new one. Repeating this cycle of hypothesis and thinking leads to results. As I pursue my research with this approach, it is crucial to monitor my position by always keeping an eye on the world’s research.
It is important to make hypotheses and models and design experiments while assessing the research of opposing and supportive groups and advancing our own research. It is necessary to think carefully without pandering to every opinion, and new ideas, approaches, and experimental methods will emerge in the process of deliberation. If you look at your ideas and experimental results in the context of those from other research groups, you will not lose sight of where you stand. In that sense, a conference is a good place to present your research results and the thinking behind them and to gain a great deal from the reactions of the participants.

Although face-to-face conferences were suspended during the COVID-19 pandemic, they have recently resumed, and it is again possible to communicate with people face-to-face just by being in the conference venue. Even if you are just chatting, you may get unexpected hints. Collaboration and communication with people in different fields will also broaden your knowledge and lead you to discover new directions. I’ve been focusing on information processing from somatic sensations to motions for a long time, but I have recently become interested in the study of visual information processing from the viewpoint of motor processing. Attending presentations by researchers in vision science at conferences and exchanging opinions with them allowed me to think about it in a new way. In that sense, I think attending conferences is very useful.

—What is your message to younger researchers?

Let’s just enjoy doing research. Enjoying something does not mean taking it easy. To enjoy research, you need hard work, effort, and ingenuity to make it enjoyable. During the course of your research, sometimes you have to deal with seemingly useless matters, and other times you will be troubled by a lack of results. The hardships and the efforts required to overcome them can be turned into enjoyment simply by changing your way of thinking.
If too many things in a different field are unknown to you, you may lose interest in that field and just do what you are instructed to do by senior researchers or supervisors. If you are not getting results, it is necessary to pause and look around instead of focusing only on the difficulties in front of you. I think it is fun to actively seek to know what you don’t know, understand what you don’t understand, and become able to do things you didn’t think you could do before. I hope that with that mindset, you will change your perspective and enjoy working on problems. Such efforts and ingenuity may lead to discoveries and new creations. You will be the first person in the world to arrive at the results of your research, and I think that realization is the real thrill of research and the most enjoyable part of it. The more effort and hard work you put in, the stronger this feeling will become.

To enjoy doing research, however, it is also important to be able to distinguish between on-duty and off-duty time. You need to take a good rest when you get tired of your research. Perhaps a good idea will suddenly come to you while you are relaxing on holiday, even when you have thought a problem through at work and cannot find a solution. I have had such experiences myself.

Collaborating with others is also crucial. It is impossible for a single researcher to complete all of their own research, which is why it is necessary to communicate and collaborate with other researchers. Researchers who have a slightly different viewpoint from your own or a different specialty may be suitable collaborators. Conflicting arguments are needed to improve the quality of research. It is not effective to form a clique only with people who agree with you. If you talk with other researchers with the mindset that learning something you don’t know is interesting and enjoyable, you can expand the scope of your research and enjoy doing research and having discussions.

References

■Interviewee profile

Hiroaki Gomi received a B.E., M.E., and Ph.D. in mechanical engineering from Waseda University, Tokyo, in 1986, 1988, and 1994, respectively. He was involved in biological motor control research at ATR (Advanced Telecommunication Research Labs., Kyoto) from 1989 to 1994, where he developed computational models of human motor control, robot learning mechanisms (demonstration learning), and a manipulandum system for investigating human arm movement. He was an adjunct lecturer at Waseda University (1995–2001) and adjunct associate professor (2000–2003) and adjunct professor (2003–2004) at Tokyo Institute of Technology. He was also involved in the CREST (1996–2003, 2010–2015) and ERATO (2005–2010) projects of Japan Science and Technology Agency, and the Correspondence and Fusion of Artificial Intelligence and Brain Science Project (2016–2020). He served as a committee member of the neuro-computing technical group of the Institute of Electronics, Information and Communication Engineers (IEICE) (1997–2000), its vice chair (2006), and chair (2007), committee member of the Japanese Neural Network Society (JNNS) (2012–2018, 2020–2024), president of JNNS (2021–2023), and chair of the Brain and Mind Mechanism workshop (2015–2020). His current research interests include the computational and neural mechanisms of implicit human sensorimotor control, the interaction among sensory, motor, and perceptual processes, and the development of tactile interfaces. He is an IEICE fellow and a member of the Society for Neuroscience, the Society for the Neural Control of Movement, the Japan Neuroscience Society, the Japanese Neural Network Society, and the Society of Instrument and Control Engineers.
