Feature Articles: Research and Development of Technology for Nurturing True Humanity

Vol. 22, No. 4, pp. 24–28, Apr. 2024. https://doi.org/10.53829/ntr202404fa2

Toward Enabling Communication Connecting Mind and Mind, Body and Body, and Mind and Body

Abstract

To advance research on more intuitive interfaces that connect people to people and people to machines, and to achieve interfaces that are user-friendly for everyone regardless of age or disability, we focus on researching neurotechnology using brainwaves and cybernetics using techniques such as electromyostimulation. In this article, we introduce our latest research.

Keywords: neurotech, cybernetics, human interface

1. Introduction

NTT Human Informatics Laboratories has long been actively researching ways to facilitate smooth and rich communication. Concepts such as interfaces that use bio-signals to enable computer operations based on mental imagery have also long existed. There has recently been significant progress in miniaturizing and improving the precision of devices that acquire vital information, such as brainwaves and electromyography, and of devices that generate movement by directly stimulating muscles electrically. As a result, it is becoming possible to introduce the results of our research into society. On the basis of the evolution of such devices, we at NTT Human Informatics Laboratories are researching neurotechnology and cybernetics. In this article, we highlight recent research achievements, including sensibility-analysis technology based on similarity of brain representations (Section 2), a facial-expression-recognition tendency-modification approach (Section 3), and motor-skill-transfer technology (Section 4).

2. Sensibility-analysis technology based on similarity of brain representations

We aim to enable mind-to-mind communication beyond language, behavior, and cultural norms. Our research focuses on developing brain-representation visualization technology, which interprets and expresses various types of brain information (i.e., brain representations) such as emotions and cognitive states extracted from brain activities. We have proposed several interfaces, including a system that takes inputs from brain activities and converts them into aura effects associated with avatars in the metaverse. These interfaces enable a direct and visual understanding of brain representations.

As part of these efforts, we developed a sensibility-analysis technology based on similarity of brain representations that reveals personal sensibilities and differences in perception between individuals. This technology sequentially presents images to the user wearing an electroencephalogram (EEG) headset. It then conducts similarity analysis of brain activities on the basis of the EEG data recorded when the user views each image. By using the analysis results, a sensibility map is created, where elements are arranged linearly with similar items placed close together and dissimilar ones farther apart (see Fig. 1). The sensibility map visually illustrates users’ subjective interpretations of styles and features of the image content. For example, it conveys ideas such as “Images located near each other have a similar impression” or “Images separated by only one image evoke a unique feeling.”

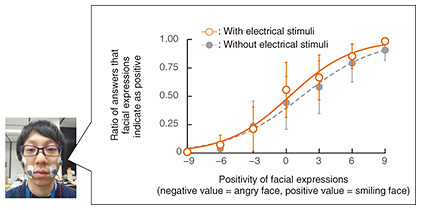
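The article does not state which similarity measure or layout method underlies the sensibility map, so the following is only a minimal sketch of the general idea under stated assumptions: each image is represented by a hypothetical EEG feature vector, similarity is taken to be Pearson correlation, and the linear arrangement is obtained with classical multidimensional scaling onto one axis. All function names and the feature format are illustrative and do not come from the article.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length feature vectors."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def distance_matrix(features):
    """Pairwise distances sqrt(2 * (1 - r)); highly correlated responses end up close."""
    names = list(features)
    dist = [[math.sqrt(max(0.0, 2.0 * (1.0 - pearson(features[p], features[q]))))
             for q in names] for p in names]
    return names, dist


def embed_1d(dist, iterations=200):
    """Classical MDS onto one axis; the leading eigenvector is found by power iteration."""
    n = len(dist)
    sq = [[d * d for d in row] for row in dist]
    row_mean = [sum(row) / n for row in sq]
    grand_mean = sum(row_mean) / n
    # Double centering turns squared distances into an inner-product matrix.
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean)
          for j in range(n)] for i in range(n)]
    v = [float(i + 1) for i in range(n)]  # fixed start vector keeps the result reproducible
    for _ in range(iterations):
        w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # all items identical: there is no spread to show
            return [0.0] * n
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(b[i][j] * v[j] for j in range(n)) for i in range(n))
    scale = math.sqrt(max(eigenvalue, 0.0))
    return [x * scale for x in v]


def sensibility_order(features):
    """Image names sorted by their position on the one-dimensional map."""
    names, dist = distance_matrix(features)
    coords = embed_1d(dist)
    return [name for _, name in sorted(zip(coords, names))]
```

Calling `sensibility_order` with a dictionary that maps image names to feature vectors returns the names in map order. The direction of the axis is arbitrary, so only relative positions are meaningful. The distance `sqrt(2 * (1 - r))` is chosen here because it equals the Euclidean distance between standardized vectors, which keeps the scaling step well behaved.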

On the sensibility map, elements with particularly high similarity are enclosed in a single group, and the overlap and inclusion relationships among groups are represented as a Venn diagram. Furthermore, labels representing each group are generated from the captions of included images. These labels linguistically describe the user’s perspective when viewing these images.

As an application to creative activities and capabilities (creativity), we implemented a function that takes multiple images on the sensibility map as input and combines their semantic features to generate a new image. With the advancement of generative artificial intelligence (AI), non-professionals can now achieve high-quality expressions. However, it is challenging for the average user to vividly imagine what they want to express and then transcribe it into an instruction text (prompt). By understanding the user’s sensibility through EEG analysis and visualization and using it to generate images, it will become possible to directly incorporate the user’s sensibility into generative AI and improve its output.

We conducted multiple evaluation experiments on several categories of images such as paintings and label designs. Regarding the generation of landscape paintings, this technology using brainwave analysis outperformed conventional prompt-based inputs. Users said that they felt they could express what they wanted to express, and evaluation scores were significantly higher in terms of expression accuracy, sense of identity, and preference for generation results. This superiority can be attributed to the difficulty in linguistically describing characteristics and impressions of landscapes painted in artistic styles such as realism and impressionism.

When comparing the results of similarity analysis of brain activities with the data from subjective evaluations (adjectives of impressions received from images), we observed considerable variation among individuals in the items that showed correlations.
However, certain items showed consistent correlations across individuals, including perceptual expressions (e.g., “bright” and “hard”) and subjective evaluations (e.g., “beautiful”). The results suggest the potential to extract not only aesthetic values but also individual-specific impressions from brain representations. By improving methods that use brainwave analysis for generation, our approach aims to enable even those who are not proficient in using AI for expression to achieve their desired creative expressions.

Interfaces incorporating brain information, including the sensibility-analysis technology based on similarity of brain representations introduced in this article, are anticipated to enhance people’s creativity and expressive desires, contributing to an information infrastructure that will foster richer communication. We will continue our research and development efforts to create a society where individuals with diverse characteristics share sensibilities, fostering mutual growth and creativity with AI.

3. Facial-expression-recognition tendency-modification approach

We aim to support smooth communication by modifying the recognition tendencies of facial expressions through providing subtle stimuli. There are individual differences in how people interpret emotions from others’ facial expressions, and these differences can sometimes lead to communication discrepancies. Therefore, we explore methods of transforming facial-expression-recognition tendencies and promoting desirable perceptions. Our approach focuses on the facial feedback hypothesis, which suggests that the movement of facial muscles creating expressions can affect a person’s emotions through feedback to the brain [1]. Previous studies related to this hypothesis suggested that moving facial muscles affects the elicitation and recognition of corresponding emotions [2].
For example, moving the zygomatic major muscle in the cheek can induce positive emotions such as happiness, making it easier to perceive others’ expressions as positive. Building upon this phenomenon, we hypothesized that applying subtle stimuli to facial muscles could induce facial feedback effects and modify facial-expression-recognition tendencies. We conducted experiments to investigate the modification of facial-expression-recognition tendencies when providing subthreshold electrical stimuli to facial muscles. Participants were presented with facial expressions displaying various intensities of anger or laughter. They judged whether the facial expression was positive or negative, and the results were compared between conditions with and without electrical stimuli. The results suggested that when electrical stimuli were applied to the facial muscles in the cheeks, participants were more likely to judge facial expressions as positive compared with the condition without electrical stimuli (see Fig. 2).

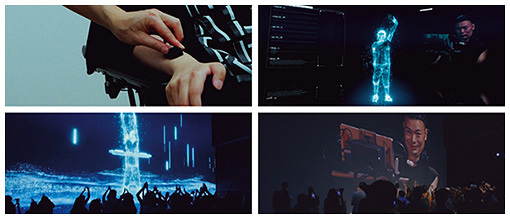
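The comparison described above, between judgments made with and without electrical stimuli, can be sketched as a simple tabulation. This is an illustrative outline only, not the analysis used in the study: the trial format, the condition labels `no_stim` and `cheek_stim`, and the numeric expression-intensity level are all assumptions introduced for the example.

```python
from collections import defaultdict


def positive_rate(trials):
    """Share of 'positive' judgments for each (condition, intensity level) pair.

    Each trial is a tuple (condition, level, judged_positive); this layout is
    a hypothetical format chosen for the sketch.
    """
    counts = defaultdict(lambda: [0, 0])  # [positive judgments, total trials]
    for condition, level, judged_positive in trials:
        counts[(condition, level)][0] += int(judged_positive)
        counts[(condition, level)][1] += 1
    return {key: pos / total for key, (pos, total) in counts.items()}


def rate_shift(trials, baseline="no_stim", treatment="cheek_stim"):
    """Difference in positive-judgment rate (treatment minus baseline) per level.

    Levels that were not presented in both conditions are skipped.
    """
    rates = positive_rate(trials)
    levels = sorted({level for _, level in rates})
    return {
        level: rates[(treatment, level)] - rates[(baseline, level)]
        for level in levels
        if (treatment, level) in rates and (baseline, level) in rates
    }
```

A positive value returned by `rate_shift` for a given level means that expressions of that intensity were judged positive more often under stimulation than without it. A real analysis would additionally need a statistical test across participants, which this sketch omits.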

We aim to further understand the relationship between the type and location of stimuli and the modification of facial-expression recognition. We will also attempt to explore and identify more effective stimuli.

4. Motor-skill-transfer technology

For many years, we have been advancing research in motor-skill-transfer technology, which uses devices such as electromyography sensors to measure and record the movements of human muscles and transfers those movements to oneself and others using myoelectrical stimulation. Through experiments, we demonstrated the effectiveness of sensing skilled practitioners’ movements and transcribing them to beginners. This allows individuals to efficiently perform correct movements, particularly in activities that are challenging to master, such as musical instrument playing or sports.

This technology has potential applications in situations where age or disabilities hinder individuals from moving as intended. By transcribing the movements of healthy individuals, including their past selves, those facing challenges in movement due to aging or physical impairments may regain motor skills.

Our research has expanded beyond muscle movement transcription to include movement control based on brainwaves and avatar control within virtual reality environments such as the metaverse. In the field of movement control based on brainwaves, we developed the Neuro-Motor-Simulator, a model that captures the intricate mechanism by which muscles move and actions are performed on the basis of brain commands. Our aim is to create a technology that accurately reproduces movements from brainwave signals. This technology holds the potential to evolve into an artificial spine for individuals with spinal cord injuries, allowing them to regain motor capabilities. We are also exploring the control of avatars in metaverse spaces by sensing brainwaves and electromyography.
Collaborating with a DJ and artist who has amyotrophic lateral sclerosis (ALS), a condition in which muscle movement gradually becomes difficult, we conducted experiments to control avatars in metaverse space by sensing electromyography from minimally responsive muscles. This research involved attempting live performances within the metaverse (see Fig. 3).
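How weak electromyography signals might be turned into discrete avatar commands is not detailed in the article. As a rough sketch under stated assumptions, one common pattern is to rectify and smooth the signal into an envelope and then apply a two-level (hysteresis) threshold, so that small fluctuations do not trigger repeated actions. The function names, window length, and threshold parameters below are hypothetical and are not taken from the experiments described above.

```python
def envelope(samples, window):
    """Rectify the signal and smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    smoothed = []
    running_sum = 0.0
    for i, value in enumerate(rectified):
        running_sum += value
        if i >= window:
            running_sum -= rectified[i - window]  # drop the sample leaving the window
        smoothed.append(running_sum / min(i + 1, window))
    return smoothed


def detect_onsets(env, on_level, off_level):
    """Return the sample indices at which muscle activation starts.

    Activation begins when the envelope reaches on_level and ends only when
    it falls to off_level or below, which suppresses chatter near the threshold.
    """
    active = False
    onsets = []
    for i, level in enumerate(env):
        if not active and level >= on_level:
            active = True
            onsets.append(i)
        elif active and level <= off_level:
            active = False
    return onsets
```

Each index returned by `detect_onsets` could then be mapped to an avatar action. In practice the two levels would need to be calibrated per person and per muscle, for example relative to a resting baseline, which matters particularly when the usable muscle activity is very small.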

5. Future initiatives

At NTT Human Informatics Laboratories, we will accelerate the advancement of various areas of neurotechnology and cybernetics research beyond the technologies introduced in this article. Our goal is to create new communication technologies that directly connect minds and minds, bodies and bodies, and minds and bodies. Through these technologies, we aim to build a world where people can understand each other regardless of gender, age, culture, interests, etc. We also strive to create a world where anyone, regardless of age or disability, can move their body as envisioned.

References
