
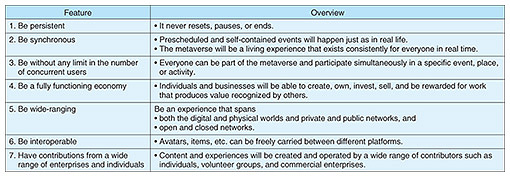

Feature Articles: Research and Development of Technology for Nurturing True Humanity

Vol. 22, No. 4, pp. 29–36, Apr. 2024. https://doi.org/10.53829/ntr202404fa3

Project Metaverse: Creating a Well-being Society through Real and Cyber Fusion

Abstract

NTT Human Informatics Laboratories is researching and developing the “metaverse of the IOWN era,” in which the real and cyber worlds converge on the Innovative Optical and Wireless Network (IOWN), NTT’s next-generation communication infrastructure featuring ultra-high capacity, ultra-low latency, and ultra-low power consumption. This article introduces the laboratories’ latest research and development initiatives, organized around the two main components of this metaverse: space and humans (avatars).

Keywords: metaverse, Another Me, XR

1. Background

The word “metaverse” in the title of this article first appeared in the 1992 science-fiction novel Snow Crash as the name of a fictitious communication service in virtual space. It became a common noun as a variety of virtual-space services emerged with advances in technology. Today, it generally refers to virtual-space services on the Internet in which people interact with each other via alter egos called “avatars.” Metaverse-like virtual-space services have been quite diverse. They began with chat services offering interaction in two-dimensional (2D) virtual space, such as Fujitsu Habitat, launched in 1990, and extended to services such as Second Life (Linden Lab), which include sales of land and items and close-to-reality economic activity using in-world currency. More recently, they have come to include multiplayer online games such as Fortnite (Epic Games) and Animal Crossing: New Horizons (Nintendo), as well as business-oriented services such as Horizon Workrooms (Meta Platforms) and Mesh for Microsoft Teams (Microsoft).
The metaverse concept and metaverse-like services have existed for some time, but there is still no uniform definition. Leaders of big tech companies and other experts have offered a number of definitions and opinions, but after the American venture capitalist Matthew Ball compiled and presented the core attributes of the metaverse and the features it should provide in 2020, a common set of guidelines began to take shape [1, 2] (Table 1).

It has been pointed out that “several decades will be needed to build an infrastructure along with an investment of several tens of billions of dollars” before an extensive metaverse with these attributes and features can be realized. Nevertheless, the metaverse is steadily being implemented in society, driven by (1) the evolution and falling cost of virtual reality (VR), augmented reality (AR), and cross reality (XR), the core technologies of a metaverse, and (2) growing expectations for virtual spaces even closer to reality, fueled by the richer means of communication and diverse experiences that became possible during the COVID-19 pandemic.

2. Project Metaverse

At NTT Human Informatics Laboratories, we aim for a society in which everyone is in a state of well-being. Our mission is to “research and develop technologies that nurture true humanity,” and our vision is to “enable information-and-communication processing of diverse human functions based on a human-centric principle.” One of our initiatives toward realizing this vision is Project Metaverse.

As noted above, the main objective of most metaverse-like services has been to provide experiences different from those of the real world, so it has been difficult to apply experiences in a metaverse directly to the real world. Moreover, although many metaverses now offer services, physical constraints limit the number of metaverses a single user can take part in, making it difficult to enjoy a variety of experiences. Against this background, we aim to provide users with a wide array of experiences, equivalent to those of the real world, across multiple metaverses by achieving (1) ultra-real virtual space and cyber/real intersecting space and (2) avatars with identity and autonomy.
We believe this will make it possible to overcome not only space-time and physical constraints but also the barriers between the cyber and real worlds, while increasing opportunities to encounter the values of others. These achievements should lead to a state of well-being not only for individuals but also for society as a whole. In this article, we introduce specific Project Metaverse activities and related technologies from two perspectives: space (spatial representation and spatial fusion) and humans (Another Me).

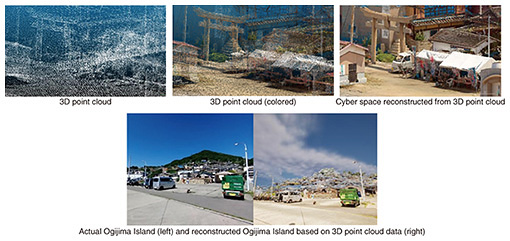

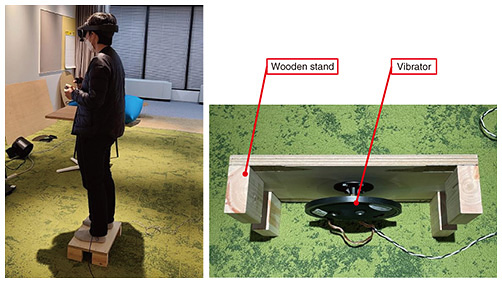

3. Spatial representation and spatial fusion

3.1 Spatial representation: constructing virtual space sensed with all five senses

Efforts to reproduce actual buildings and structures, historic sites, and landscapes in virtual space so that they can be experienced as a realistic metaverse have so far centered on tourism, mainly with regional development and revitalization in mind. Methods ranging from research prototypes to commercial services have been explored. However, a common problem hindering enjoyment of and immersion in such a metaverse is the gap between the real and cyber worlds in terms of the sense of presence. In particular, for experiences such as enjoying scenery and nature while moving through a broad area, reproducing such a visually detailed, extensive space is costly. It is also difficult to reproduce the experiences and bodily sensations of actually visiting the location from visual information alone. For these reasons, it has been difficult to provide a strong sense of presence.

In light of the above, we constructed a virtual space that can be sensed with all five senses, targeting Ogijima Island, a small island in the center of Japan’s Seto Inland Sea [3]. To build a visually detailed, extensive virtual space while keeping costs as low as possible, we adopted two approaches (Fig. 1): (1) point cloud data obtained with light detection and ranging (LiDAR), a sensor technology that can measure actual space extensively and easily, together with 3D point cloud media processing technology for analyzing and integrating that data, both of which are also used in self-driving systems; and (2) photogrammetry, which creates realistic three-dimensional computer graphics (3DCG) from multiple photographs taken from a variety of angles.
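The article does not describe its point cloud processing pipeline. As a rough illustration of one common integration step, the Python sketch below merges several LiDAR scans that are assumed to be already registered to a shared coordinate frame and thins overlapping points with a voxel grid, replacing all points in each cube by their centroid. The function names and the 0.1 m voxel size are hypothetical choices for this example, not NTT's method.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points that fall into the same cubic voxel by their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        # Integer voxel index per axis; floor keeps negative coordinates correct.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    result: List[Point] = []
    for key in sorted(buckets):  # sorted keys give a deterministic output order
        pts = buckets[key]
        n = len(pts)
        result.append((sum(p[0] for p in pts) / n,
                       sum(p[1] for p in pts) / n,
                       sum(p[2] for p in pts) / n))
    return result


def merge_scans(scans: List[List[Point]], voxel_size: float) -> List[Point]:
    """Concatenate scans (assumed already registered) and thin overlapping points."""
    merged = [p for scan in scans for p in scan]
    return voxel_downsample(merged, voxel_size)


# Hypothetical example: two scans overlap near the origin.
scan_a = [(0.01, 0.02, 0.0), (0.03, 0.01, 0.0)]
scan_b = [(0.02, 0.02, 0.0), (1.55, 0.0, 0.0)]
merged = merge_scans([scan_a, scan_b], voxel_size=0.1)
print(len(merged))  # 2: the three nearby points collapse into one voxel
```

A voxel grid keeps point density roughly uniform regardless of how many scans covered an area, which is one simple way to keep an island-scale model manageable; production pipelines typically add registration, outlier removal, and compression on top.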
In combination with these, we used stereophonic acoustics technology and a newly developed walking-sensation presentation technique based on sounds and vibrations (Fig. 2) to faithfully reproduce and integrate the sensory information “at that location,” stimulating other senses such as hearing and touch in addition to sight. This gives users the sensation of “actually being there” through the five senses. We believe that making experiences in virtual space equivalent to those in the real world in this way will allow those experiences to carry over seamlessly into the real world.
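The walking-sensation technique itself is not specified in the article. The sketch below only illustrates the basic idea of pairing each virtual footstep with a sound cue and a vibration cue: it assumes a straight walk at constant speed, derives the interval between footfalls from step length and walking speed, and looks up a vibration strength per ground surface. The surface table, gain values, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical vibration strengths (0..1) per ground surface; not from the article.
SURFACE_GAIN = {"asphalt": 0.6, "gravel": 0.9, "sand": 0.4}


@dataclass
class StepEvent:
    time_s: float     # time at which the virtual foot lands
    foot: str         # "left" or "right"
    vibration: float  # amplitude for the vibration actuator
    sound: str        # name of the footstep sample to play


def schedule_steps(distance_m: float, speed_m_s: float,
                   step_length_m: float, surface: str) -> List[StepEvent]:
    """Emit one paired sound + vibration cue per footstep along a straight walk."""
    if speed_m_s <= 0 or step_length_m <= 0:
        raise ValueError("speed and step length must be positive")
    interval = step_length_m / speed_m_s          # seconds between footfalls
    n_steps = int(distance_m // step_length_m)    # whole steps that fit the path
    gain = SURFACE_GAIN.get(surface, 0.5)         # default for unknown surfaces
    return [
        StepEvent(time_s=(i + 1) * interval,
                  foot="left" if i % 2 == 0 else "right",
                  vibration=gain,
                  sound=f"{surface}_step")
        for i in range(n_steps)
    ]


# Hypothetical walk: 3 m over gravel at 1.5 m/s with 0.75 m steps.
events = schedule_steps(3.0, 1.5, 0.75, "gravel")
for ev in events:
    print(ev.time_s, ev.foot, ev.vibration, ev.sound)
```

Keeping sound and vibration in one event object is a deliberate choice here: both cues are emitted from the same timestamp, so they stay synchronized with each other and with the visual walking motion.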

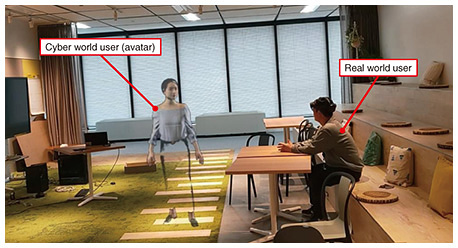

3.2 Spatial fusion: constructing intersecting space spanning two worlds

An avatar acting as one’s alter ego in a metaverse generally exists within a closed virtual space, and its range of communication is likewise limited to that space. Users in the real world therefore cannot see what such an avatar is doing, which may give rise to the phenomena known as the “echo chamber” or “filter bubble” and, in turn, to division or discord between the cyber and real worlds. We have therefore been researching technologies that enable users (avatars) active in the cyber world and people in the real world to overcome the barrier between the two worlds and communicate with each other. One is High-precision VPS (visual positioning system), which establishes positional correspondence between information in the cyber world (content and avatars) and the real world. Another is the Glasses-free XR AISEKI system, which enables communication with avatars and virtual characters in the cyber world without special equipment such as VR goggles.

High-precision VPS builds on technology that has recently come into use in AR services. By combining a virtual space reproduced finely and extensively from point cloud data, as described above, with a camera-equipped smartphone or AR glasses, it becomes possible to position users in the cyber and real worlds correctly in a shared space and let them communicate freely within it (Fig. 3). The Glasses-free XR AISEKI system creates an intersecting space in which users in the real world can sit next to, or share a table with, an avatar or other character from the cyber world, using a multilayer 3D arrangement of a half mirror, a display, and physical objects such as a desk or sofa. This enables natural bidirectional communication among multiple users in both worlds without VR goggles or other equipment.
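How High-precision VPS computes position is not detailed here. A core step in any such system is estimating the transform between a device's local tracking frame and the point-cloud map frame. The Python sketch below reduces this to 2D and assumes that matched landmark pairs are already known (a real system would obtain them by matching camera images against the map and would solve a full 3D pose); it fits the least-squares rotation and translation in closed form (2D Procrustes/Kabsch). All names are hypothetical.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def fit_rigid_2d(device_pts: List[Vec2], map_pts: List[Vec2]) -> Tuple[float, Vec2]:
    """Least-squares theta, t such that map ≈ R(theta) @ device + t."""
    n = len(device_pts)
    if n < 2 or n != len(map_pts):
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    dcx = sum(p[0] for p in device_pts) / n
    dcy = sum(p[1] for p in device_pts) / n
    mcx = sum(p[0] for p in map_pts) / n
    mcy = sum(p[1] for p in map_pts) / n
    # Sum dot and cross products of the centred pairs; the optimal angle
    # maximises cos(theta) * s_dot + sin(theta) * s_cross.
    s_dot = s_cross = 0.0
    for (dx, dy), (mx, my) in zip(device_pts, map_pts):
        ax, ay = dx - dcx, dy - dcy
        bx, by = mx - mcx, my - mcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated device centroid onto the map centroid.
    t = (mcx - (c * dcx - s * dcy), mcy - (s * dcx + c * dcy))
    return theta, t


def device_to_map(p: Vec2, theta: float, t: Vec2) -> Vec2:
    """Express a device-frame point (e.g. a user's position) in map coordinates."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


# Hypothetical landmarks: the map frame is the device frame rotated 90° and shifted.
theta, t = fit_rigid_2d([(0, 0), (1, 0), (0, 1)], [(2, 3), (2, 4), (1, 3)])
print(math.degrees(theta), t)  # ≈ 90° rotation, translation ≈ (2, 3)
```

Once the transform is known, every position reported by the device can be mapped into the shared virtual space (and the inverse transform maps avatars back onto the camera view), which is what lets real-world users and avatars occupy the same coordinates.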

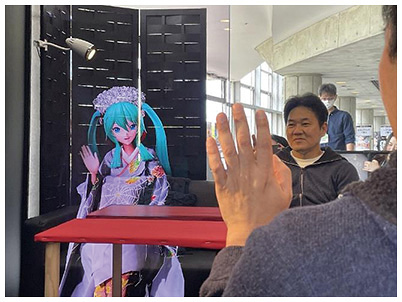

A Glasses-free XR AISEKI system was introduced at the Cho-Kabuki Powered by NTT event in April 2023 as a shared-seating magic lantern tea house, where visitors could enjoy a conversation with virtual diva Hatsune Miku while sitting beside her in the same space. Visitors rated the system highly, with comments such as “I could see Hatsune Miku in 3D!” and “Being so close to her gave me a thrill!” [4] (Fig. 4). We plan to develop a more compact, frameless system that is even easier to use and offers a more immersive experience.
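The optical layout of the AISEKI system is not given in the article, but half-mirror displays rest on a simple geometric fact: a planar mirror makes a point on the display appear at its reflection across the mirror plane, superimposed on whatever physical objects lie behind the glass. The sketch below computes that reflection, the kind of calculation needed to make a virtual character appear at a physical seat. The coordinates (y up, viewer on the +z side, mirror tilted 45°) are an assumed example, not the actual installation.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect_across_plane(p: Vec3, plane_point: Vec3, normal: Vec3) -> Vec3:
    """Mirror point p across the plane through plane_point with the given normal."""
    norm_sq = _dot(normal, normal)
    if norm_sq == 0:
        raise ValueError("normal must be non-zero")
    diff = (p[0] - plane_point[0], p[1] - plane_point[1], p[2] - plane_point[2])
    # Twice the signed distance to the plane, divided by |n|, so any
    # non-zero normal works without normalising it first.
    k = 2.0 * _dot(diff, normal) / norm_sq
    return (p[0] - k * normal[0], p[1] - k * normal[1], p[2] - k * normal[2])


# Hypothetical layout: a flat display 0.5 m below the pivot of a 45° half mirror.
display_pixel = (0.0, -0.5, 0.0)
image = reflect_across_plane(display_pixel, (0.0, 0.0, 0.0), (0.0, 1.0, -1.0))
print(image)  # appears 0.5 m behind the mirror, level with its pivot
```

In this assumed layout, moving the display down moves the virtual image farther behind the mirror, so a physical chair or table placed at the reflected position makes the character appear to sit among real furniture.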

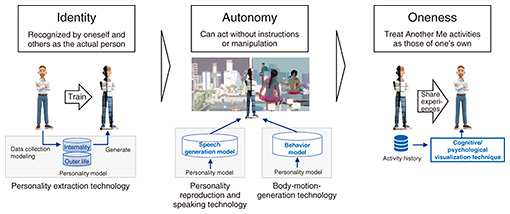

4. Another Me

4.1 Identity, autonomy, and oneness of Another Me

We aim to achieve a society in which everyone can overcome constraints such as time, space, and even disabilities and expand the opportunities in their lives. Another Me simultaneously possesses “identity,” reproducing not only a person’s appearance but also their behavior and inner qualities; “autonomy,” whereby Another Me acts on its own in the metaverse beyond the constraints of the real world; and “oneness,” whereby the results of those activities are shared with the real person as an actual experience (Fig. 5). To reach a level at which people share their identity with their avatar to the point of feeling that the avatar is “me,” we have also approached this question from a philosophical perspective and found that this sense of identity includes a desire to connect with other people and society. We envision a variety of use cases, such as an avatar offering consultations in place of the real person using that person’s specialized knowledge, or carrying out work in collaboration with that person. Based on such use cases, we have been researching and developing elemental technologies for Another Me and working to implement Another Me in society using those technologies. This article focuses on three of these technologies (body-motion-generation technology, personality extraction technology, and personality reproduction and dialogue technology) and introduces our activities in applying them.

4.2 CONN for expressing natural body motion

We have been conducting experiments that link the facial expressions and full-body motion of the digital human “CONN” (Fig. 6), a virtual personality co-created by NTT Communications Corporation, NTT QONOQ, INC., and Toei Company, Ltd., with the body-motion-generation technology of Another Me.

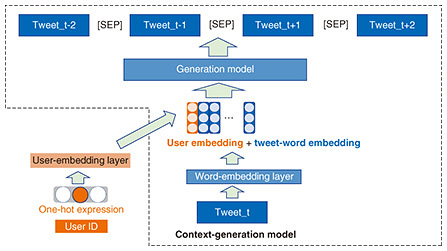

Body-motion-generation technology generates natural, human-like facial expressions and full-body motion during speech from a small amount of data [5]. Training a model on body motion together with the speech uttered during that motion and its content makes it possible to generate body motion automatically from speech alone. The model is trained to generate body motions that match the meaning of the utterances, to produce smooth body motions that reflect semantic coherence, and to generate not only motions that appear regularly but also those that appear only in special contexts. For CONN, human-like, natural body motion was achieved by collecting and modeling the body motions of people acting as models along with the speech they uttered during those motions, then generating motions tailored to CONN’s utterances.

4.3 Personality extraction and reproduction by meta-communication service MetaMe™

With the aim of enabling anyone to have Another Me as an alter ego, NTT Human Informatics Laboratories is testing and implementing personality extraction technology and personality reproduction and dialogue technology in MetaMe™*, a meta-communication service developed by NTT DOCOMO [6]. Personality extraction technology learns, for each individual, a vector that embeds information from that person’s behavior log about the personality and values that affect their behavior [7] (Fig. 7). This enables Another Me to reproduce behavior in tune with that person’s values. These vectors also make it possible to compare the similarity of people’s values, for example to find people with similar viewpoints or to form a group with a diversity of viewpoints.
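The article does not say how the learned value vectors are compared. Assuming each user is represented by a fixed-length vector, cosine similarity is one common choice; the sketch below uses it both to find the most similar users and, with a greedy max-min rule, to assemble a group whose members are as dissimilar as possible. The toy three-dimensional vectors and user names are made up for illustration; real embeddings would be learned from behavior logs.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def most_similar(target: str, vecs: Dict[str, Sequence[float]], k: int = 1) -> List[str]:
    """Users whose value vectors point in the most similar direction to target's."""
    others = [u for u in vecs if u != target]
    others.sort(key=lambda u: cosine(vecs[target], vecs[u]), reverse=True)
    return others[:k]


def diverse_group(vecs: Dict[str, Sequence[float]], size: int, seed_user: str) -> List[str]:
    """Greedy max-min selection: repeatedly add the user least similar to the group."""
    group = [seed_user]
    while len(group) < min(size, len(vecs)):
        candidates = [u for u in vecs if u not in group]
        # Choose the candidate whose closest match within the group is weakest.
        best = min(candidates, key=lambda u: max(cosine(vecs[u], vecs[g]) for g in group))
        group.append(best)
    return group


# Hypothetical value vectors for four users.
vecs = {"a": [1.0, 0.0, 0.0], "b": [0.9, 0.1, 0.0],
        "c": [0.0, 1.0, 0.0], "d": [0.0, 0.0, 1.0]}
print(most_similar("a", vecs))          # user with the closest values to "a"
print(diverse_group(vecs, 3, "a"))      # three users with dissimilar values
```

Cosine similarity ignores vector length, so two users are judged alike when their values point the same way even if one log is much longer; the greedy max-min rule is a cheap approximation, not an optimal diversity search.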

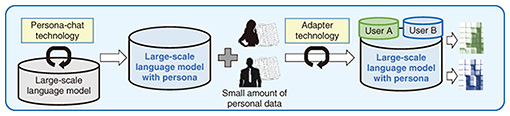

Personality reproduction and dialogue technology reproduces the dialogue of a specific individual from a small amount of training data. It does so by combining persona-chat technology, which reproduces dialogue according to the user’s profile, with adapter technology, which learns personal features (tone, phrasing, etc.) from a small amount of dialogue data (Fig. 8).
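The adapter architecture is not specified. One widely used design is a residual bottleneck adapter: a small down-projection, a nonlinearity, and an up-projection inserted into a frozen pretrained model, so that only a few parameters are trained on a person's limited dialogue data. The Python sketch below shows such an adapter's forward pass together with a hypothetical helper that prefixes profile facts to the dialogue history in a persona-chat style. All class and function names, sizes, and the prompt format are assumptions for illustration; training is omitted.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class BottleneckAdapter:
    """Residual adapter: h + W_up · ReLU(W_down · h).

    Only W_down and W_up would be trained on one person's dialogue data;
    the surrounding pretrained dialogue model stays frozen.
    """

    def __init__(self, hidden: int, bottleneck: int, seed: int = 0):
        rng = random.Random(seed)
        self.w_down: Matrix = [[rng.gauss(0.0, 0.02) for _ in range(hidden)]
                               for _ in range(bottleneck)]
        # Zero-initialised up-projection: the adapter starts as an identity map,
        # so the frozen model's behaviour is unchanged before fine-tuning.
        self.w_up: Matrix = [[0.0] * bottleneck for _ in range(hidden)]

    def __call__(self, h: Vector) -> Vector:
        z = [max(0.0, v) for v in matvec(self.w_down, h)]  # ReLU bottleneck
        delta = matvec(self.w_up, z)
        return [a + b for a, b in zip(h, delta)]            # residual connection

    def num_params(self) -> int:
        return sum(len(r) for r in self.w_down) + sum(len(r) for r in self.w_up)


def persona_prompt(profile: List[str], history: List[str]) -> str:
    """Hypothetical persona-chat style input: profile facts, then the dialogue."""
    persona = "\n".join(f"persona: {fact}" for fact in profile)
    return persona + "\n" + "\n".join(history)


adapter = BottleneckAdapter(hidden=768, bottleneck=16)
print(adapter.num_params())
print(persona_prompt(["I live in Tokyo."], ["A: Hi!"]))
```

With hidden size 768 and bottleneck 16, the adapter has 2 × 768 × 16 = 24,576 trainable weights (biases omitted), compared with 589,824 for a single full 768 × 768 layer, which is why adapters of this kind suit small per-person datasets.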

Using these technologies, we developed a prototype alter-ego non-player character (NPC). An alter-ego NPC converses in advance with another user on its owner’s behalf, so that the two users communicate more actively when they actually meet on MetaMe™, which can lead to new encounters and other opportunities. We are currently conducting a proof-of-concept experiment with NTT DOCOMO to determine whether new social connections can be established between users.

5. Conclusion

As described in this article, most metaverse-like services provided so far have been confined to virtual spaces, so the experiences they offer have differed from those of the real world. However, as Matthew Ball’s “Seven Features that the Metaverse Should Have” suggests, user experiences in the metaverse of the future will span both the real and cyber worlds by seamlessly linking and merging them. Through Project Metaverse, we aim to merge real-world and cyber-world experiences, strengthen the “connection” between people and between people and society, and create an enriching and prosperous society that embraces diversity. Please look forward to future progress in these efforts.

References
