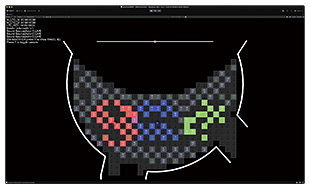

Feature Articles: NTT R&D at Expo 2025 Osaka, Kansai, Japan

Vol. 23, No. 10, pp. 25–32, Oct. 2025. https://doi.org/10.53829/ntr202510fa2

IOWN × Spatial Transmission—A Communication Experience in Which Distant Spaces Become as One

Abstract

We introduce the technologies we used to transmit a performance by the Japanese female group Perfume in real time over NTT’s Innovative Optical and Wireless Network (IOWN), as exhibited at the NTT Pavilion at Expo 2025 Osaka, Kansai, Japan. In this production, we connected Expo’70 Commemorative Park in Suita, Osaka and Yumeshima, Osaka (the site of Expo 2025) by IOWN. Using dynamic three-dimensional (3D) spatial-data transmission and reproduction technology and vibrotactile sound field presentation technology, we achieved an experience in which Perfume seemed to appear right before one’s eyes despite performing at a distant location.

Keywords: IOWN, 3D space transmission, Expo 2025 Osaka, Kansai, Japan

1. Background and goal

Expo 2025 Osaka, Kansai, Japan (hereafter referred to as Expo 2025) was held exactly 55 years after wireless telephones were announced as “telephones of the future” at Japan World Exposition Osaka 1970 (Expo ’70) held in Suita, Osaka. At NTT laboratories, we aim to surpass two-dimensional (2D) communication based on video and audio by sharing entire 3D spaces at distant locations with low latency via IOWN, and to make communication that connects all five human senses, not just sight and hearing, feel natural. To embody this form of future communication at Expo 2025, we conducted a world-first trial in which we connected the NTT Pavilion at Yumeshima, Osaka (the site of Expo 2025) with Expo’70 Commemorative Park in Suita, Osaka over IOWN and transmitted a live performance of the popular Japanese female group Perfume.
We achieved an experience in which the performers at a distant location seemed to be truly present in the same space by transmitting haptic information in addition to 3D space with latency low enough to enable bidirectional communication. In this article, we describe the two key technologies used in this production, dynamic 3D spatial-data transmission and reproduction and vibrotactile sound field presentation, and go behind the scenes to show how this performance was transmitted live using them.

2. Dynamic 3D spatial-data transmission and reproduction technology

Dynamic 3D spatial-data transmission and reproduction technology transmits not only the geometry of a space but also its dynamic changes over time, that is, spatial motion. Conventional technologies for measuring and transmitting dynamic 3D spatial data, however, pose several problems when used to transmit live performances.

The first problem is the difficulty of performing high-accuracy measurements over a wide area. Devices for measuring 3D data include RGB-D (Red Green Blue - Depth) cameras such as Microsoft’s Kinect devices. However, an RGB-D camera usually has a narrow measurement range of several meters, and significant distortions may occur in measurement values obtained at longer distances, especially near measurement boundaries.

The second problem is the difficulty of achieving low latency from measurement to display. Besides RGB-D cameras, a method for making dynamic 3D measurements of a fixed area that is being rapidly commercialized is volumetric capture. This method arranges multiple cameras around an object to measure 3D data, but because the 3D shape must be estimated and restored from multiple camera images, processing takes several seconds even in relatively low-delay cases.
However, using a bidirectional communication system such as a telephone or web conference without discomfort requires that one-way communication from measurement to presentation be completed in less than 200 ms. Volumetric capture also requires a stable environment with fixed lighting conditions, such as a studio, so it would be difficult to apply to the live performance presented in this article, which includes intense lighting effects in tune with the song or performer movements.

Instead of using only cameras as in volumetric capture, the dynamic 3D spatial-data transmission and reproduction technology developed by NTT laboratories measures 3D space with an original configuration of measuring equipment (Fig. 1) that combines one camera with three light detection and ranging (LiDAR)*1 sensors. The technology achieves wide-area spatial measurement by using multiple sets of the measurement equipment shown in Fig. 1 and executing time synchronization control of all camera and LiDAR devices over the network. The LiDAR sensors are of a type used mainly in on-vehicle applications for self-driving, which makes them capable of measuring the position of an object up to 200 m away. The technology also reduces processing by combining LiDAR data, which provide depth values directly, with camera image data, thereby shortening the time from measurement to display.
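
As a rough illustration of how LiDAR depth and camera images can be combined, the sketch below attaches a camera color to each LiDAR point by projecting it through a pinhole camera model. This is a generic textbook approach, not NTT's actual pipeline. The `PinholeCamera` intrinsics, the tiny test image, and the assumption that the points are already expressed in the camera's coordinate frame (that is, extrinsic calibration has been applied) are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]   # (x, y, z) in the camera frame, metres
Color = Tuple[int, int, int]         # (r, g, b)


@dataclass
class PinholeCamera:
    """Hypothetical intrinsics; real values would come from calibration."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def colorize(points: List[Point], image: List[List[Color]],
             cam: PinholeCamera) -> List[Tuple[Point, Optional[Color]]]:
    """Attach a camera color to each LiDAR point that projects into the image."""
    out = []
    for x, y, z in points:
        color = None
        if z > 0:  # only points in front of the camera can be seen
            u = int(round(cam.fx * x / z + cam.cx))  # pixel column
            v = int(round(cam.fy * y / z + cam.cy))  # pixel row
            if 0 <= u < cam.width and 0 <= v < cam.height:
                color = image[v][u]
        out.append(((x, y, z), color))
    return out


# Toy 4 x 4 image whose pixel value encodes its own (row, column).
cam = PinholeCamera(fx=2.0, fy=2.0, cx=2.0, cy=2.0, width=4, height=4)
img = [[(r, c, 0) for c in range(4)] for r in range(4)]
res = colorize([(0.0, 0.0, 5.0), (1.0, 0.0, 2.0),
                (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)], img, cam)
for point, color in res:
    print(point, color)
```

Points behind the camera or outside its field of view stay uncolored (`None`). In a real system, such points would need color from another camera or would be discarded.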

With this technology, time synchronization control of the camera and LiDAR devices uses the Precision Time Protocol (PTP), which synchronizes devices via the network with nanosecond-to-submicrosecond accuracy. While each LiDAR sensor can measure space only 10 times per second, PTP is used to stagger the measurement timing of the three LiDAR sensors by 1/30 of a second, thus achieving spatial measurement at 30 times per second.

The ability to match and collect multiple sets of sensor data with high accuracy on the basis of position and time is the foundation of this technology. It plays an important role in sensor fusion, which correctly integrates data measured by separate LiDAR sensors into one set of 3D data and colorizes LiDAR 3D point-cloud*2 data, which carry no color information, using images obtained with another sensor, i.e., the camera.

As described above, the key feature of this technology is its combination of camera and LiDAR data. However, many technical issues must be addressed to make the technology practical. The first issue is that the point-cloud data obtained from LiDAR measurements are “sparse” compared with camera images. In general, a LiDAR sensor emits laser light as scanning lines and calculates the distance to an object by the time-of-flight method, which uses the time taken for the light to reflect off the object’s surface and return. The spatial density that can be measured therefore depends on the number of scanning lines, which at present is only 128 at maximum density. Considering that a full high-definition camera image has 1080 rows of pixels, this number of scanning lines is indeed sparse.

The second issue is the noise and misalignment between devices that arise when making measurements using multiple camera/LiDAR sensors.
Correctly integrating data measured by multiple camera/LiDAR devices into a single set of data requires that the positional relationship among those devices be accurately obtained and that calibration be performed to precisely compensate for measurement distortion in each device. However, completely error-free calibration is difficult, so countermeasures are needed against the misalignment and distortion generated by such errors and against errors that occur in colorizing point-cloud data from camera images.

In dynamic 3D spatial-data transmission and reproduction technology, the problems of density and misalignment/distortion are solved using our original densification technology and noise removal technology. The densification technology predicts depth values from camera images with low latency in areas that cannot be measured with LiDAR. This was achieved by developing an algorithm that is 20 times faster than the existing technique. The noise removal technology consists of a technique for removing afterimage noise generated by a fast-moving object, a technique for removing point-cloud data erroneously colored from camera images, and filter processing that prevents the noise and distortion of each measurement device from accumulating and degrading the image when 3D data measured from many angles are integrated into one set of data.

As a result of these research achievements, dynamic 3D spatial-data transmission and reproduction technology can measure data, apply densification and noise removal, send and receive the data, and put them into a state viewable as 3D information, all within 100 ms. The technology is therefore well within the one-way delay of 200 ms deemed necessary to enable bidirectional communication without discomfort.
With respect to the issues of 3D-space measurement density and misalignment/distortion, the technology has reached a level that enables viewing without discomfort on the large 3D light-emitting diode (LED) display at the NTT Pavilion.
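
The staggered LiDAR timing described in this section (three sensors, each scanning 10 times per second, offset from one another by 1/30 of a second) can be checked with a small schedule calculation. This is a minimal sketch. The function name `staggered_schedule` and its parameters are illustrative and not part of any NTT or PTP API, and the sketch assumes the device clocks are already synchronized.

```python
from fractions import Fraction
from typing import List, Tuple


def staggered_schedule(num_sensors: int, sensor_rate_hz: int,
                       duration_s: int) -> List[Tuple[Fraction, int]]:
    """Return (start_time_s, sensor_index) pairs sorted by time.

    Each sensor scans at sensor_rate_hz; sensor k is delayed by
    k / (num_sensors * sensor_rate_hz) seconds relative to sensor 0.
    """
    period = Fraction(1, sensor_rate_hz)   # time between scans of one sensor
    offset = period / num_sensors          # stagger between adjacent sensors
    events = []
    for k in range(num_sensors):
        for n in range(sensor_rate_hz * duration_s):
            events.append((k * offset + n * period, k))
    return sorted(events)


schedule = staggered_schedule(num_sensors=3, sensor_rate_hz=10, duration_s=1)
# Collect the distinct gaps between consecutive measurement instants.
gaps = {b[0] - a[0] for a, b in zip(schedule, schedule[1:])}
print(len(schedule), gaps)
```

With these inputs, the combined schedule contains 30 measurement instants in one second, evenly spaced 1/30 s apart, with the sensors taking turns in the order 0, 1, 2. Exact fractions are used so that the spacing can be compared without floating-point error.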

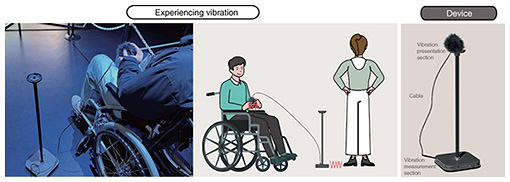

3. Vibrotactile sound field presentation technology

Vibrotactile sound field presentation technology measures the tactile vibrations of footsteps, which vary according to the structure and shape of the ground, the shoes being worn, and the intensity of steps, and reproduces them at a distant location together with a sense of localization. For example, in this live performance, in which the members of Perfume wore boots on a wooden stage, the technology made it possible to transmit the texture and feel of their firm footsteps as they danced with sharp movements so that viewers at a distant location could feel as if they were on the same stage. Measurement and reproduction that include localization mean that, when the position of a subject generating vibration changes, viewers in the other venue can sense that change in position. For example, when a performer moves from side to side, the technology transmits that movement so that the tactile vibration is felt moving to the left and right.

In conventional technology, tactile vibration is commonly measured by embedding an array of microphones under the floor. However, this technique requires a double-layered arena or stage floor, installation of many microphones, wiring to collect signals, and so on, which drives up installation and construction costs. Moreover, in a live performance like the one described here, floor microphones pick up the music being played in addition to tactile vibration, making it difficult to cleanly measure only the desired signals.

At NTT laboratories, we addressed these problems by developing an original compact wireless sensor (Fig. 2) and a technique for attaching that sensor directly to a performer’s shoe. This sensor measures acceleration, in contrast to an ordinary microphone, which measures acoustic vibrations as sound.
Unlike the microphone-based method, using an accelerometer in this way enables tactile vibrations to be measured without being affected by environmental sounds such as the music accompanying a dance. However, when tactile vibration is measured through acceleration, “vibration texture,” such as the feeling of boots knocking on a hard floor, is generally lost more easily than with microphones. This technology therefore emphasizes or suppresses vibration by frequency when reproducing it, thus reproducing texture even from acceleration data.
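
The article does not disclose how emphasis and suppression by frequency are implemented. Purely as an illustration of the general idea, the sketch below scales each frequency component of a short acceleration frame by a gain that depends on frequency, using a plain discrete Fourier transform. The function `shape_by_frequency`, the 64 Hz sample rate, the 10 Hz boundary, and the gain values are all hypothetical choices made for this example.

```python
import cmath
import math
from typing import Callable, List


def shape_by_frequency(samples: List[float], sample_rate_hz: float,
                       gain_for: Callable[[float], float]) -> List[float]:
    """Scale each frequency component of a real signal by gain_for(frequency)."""
    n = len(samples)
    # Forward DFT (O(n^2); acceptable for a short illustrative frame).
    spectrum = [sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples)) for k in range(n)]
    shaped = []
    for k, value in enumerate(spectrum):
        # Bins above n/2 mirror the negative frequencies of a real signal,
        # so both halves receive the same gain and the output stays real.
        freq = min(k, n - k) * sample_rate_hz / n
        shaped.append(value * gain_for(freq))
    # Inverse DFT; the imaginary part is only rounding error.
    return [(sum(v * cmath.exp(2j * math.pi * k * t / n)
                 for k, v in enumerate(shaped)) / n).real for t in range(n)]


rate = 64.0
# Test frame: a 4 Hz component plus a 16 Hz component of equal amplitude.
frame = [math.sin(2 * math.pi * 4 * t / rate) + math.sin(2 * math.pi * 16 * t / rate)
         for t in range(64)]
# Halve everything below 10 Hz and double everything at or above it.
shaped = shape_by_frequency(frame, rate, lambda f: 0.5 if f < 10 else 2.0)
```

In this toy frame, the 4 Hz component comes out at half amplitude and the 16 Hz component at double amplitude. A real-time system would more likely use streaming filters than a block transform, but the effect on each band is the same in principle.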

At NTT laboratories, we have also developed a new tracking system that tracks the locations generating vibration so that vibration can be reproduced with localization. Specifically, we developed a technique that combines camera-based markerless tracking with tracking of originally designed self-emitting markers. This technique is robust enough not to lose track of the target subjects even in an environment in which measurement targets move frequently and change positions, as in this live performance.

NTT’s original technology was also used to efficiently reproduce measured tactile vibrations together with positional information in a large-scale environment. To enable a maximum of 70 visitors to simultaneously experience a performance at the NTT Pavilion, the experiential space was covered with a raised free-access floor consisting of as many as 352 tiles, each measuring 50 × 50 cm. Vibrations do not propagate across these tiles, so enabling vibrations to be felt anywhere in the experiential space would ordinarily require attaching a vibrator to every tile. We therefore developed an original floor design that adds a vibration propagation layer, which enabled us to reduce the number of vibrators to approximately one-third of that number while maintaining a sense of localization in eight directions (forward and backward, left and right, and diagonally) and reproducing powerful vibrations. By using an originally developed vibration controller (Fig. 3) to control which tile vibrators to actuate depending on the positions of the measured vibration sources, it becomes possible to stage various types of vibration, for example, uniform shaking across the entire floor or a change in vibration location that follows the positional relationship of the performers.
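
To make the idea of position-dependent actuation concrete, the sketch below computes a drive gain for each vibrator from the tracked position of a vibration source, using a simple linear falloff with distance. The actual control law of the vibration controller is not described in the article. The function `vibrator_gains`, the three-vibrator layout, and the 3 m radius are assumptions made only for illustration.

```python
import math
from typing import Dict, Tuple

Position = Tuple[float, float]  # (x, y) in metres on the floor


def vibrator_gains(source: Position, vibrators: Dict[str, Position],
                   radius_m: float) -> Dict[str, float]:
    """Linear distance falloff: gain 1 at the source, 0 at radius_m and beyond."""
    gains = {}
    for name, (vx, vy) in vibrators.items():
        distance = math.hypot(vx - source[0], vy - source[1])
        gains[name] = max(0.0, 1.0 - distance / radius_m)
    return gains


# Hypothetical layout: three vibrators in a row, 2 m apart.
vibs = {"left": (0.0, 0.0), "center": (2.0, 0.0), "right": (4.0, 0.0)}
g_left = vibrator_gains((0.0, 0.0), vibs, radius_m=3.0)    # performer at far left
g_right = vibrator_gains((4.0, 0.0), vibs, radius_m=3.0)   # performer at far right
print(g_left)
print(g_right)
```

As the source moves from left to right, the strongest vibrator changes from `left` to `right`, which is the kind of change in location a viewer would feel. Setting every gain to the same value would correspond to the uniform shaking mentioned above.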

At NTT laboratories, we have also developed a device that lets vibrations be felt with one’s hands so that individuals in wheelchairs can experience vibration with ease (Fig. 4). This device has a simple structure that measures nearby floor vibrations and transmits them to a hand-held unit, which provides flexible support for experiencing vibration regardless of the user’s position.

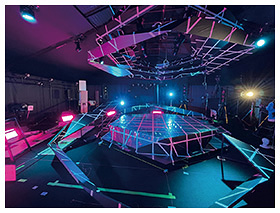

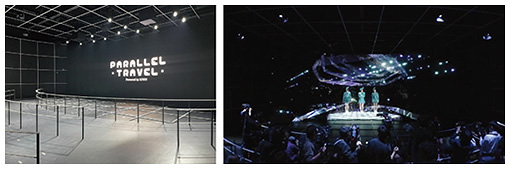

4. Behind the scenes at the trial

On April 2, 2025, we held a trial transmitting a live performance between the former site of the Telecommunications Pavilion at Expo’70 Commemorative Park in Suita, Osaka and the NTT Pavilion at Expo 2025. In keeping with the NTT Pavilion experience theme of “PARALLEL TRAVEL,” these two locations were selected as sites symbolizing the 1970 and 2025 expositions, and data were transmitted between them over a distance of approximately 20 km using NTT WEST’s All-Photonics Connect powered by IOWN. We constructed a stage within a 10 × 15-m tent in Expo’70 Commemorative Park to hold the performance (Fig. 5). Around this stage, we arranged seven sets of measuring equipment to capture dynamic point-cloud space, 28 cameras to track vibration positions, and four 3D cameras, each with 4K resolution, to capture stereo video. The voices of the three members of Perfume were picked up by pin microphones, and footstep vibrations were measured using three sets of vibrotactile sensors, with one sensor attached to each boot of each member.

The total amount of dynamic point-cloud data, vibrotactile data, stereo-camera data, and voice data transmitted between these two locations over the IOWN All-Photonics Network (APN) came to 25 Gbit/s, and the amount of dynamic point-cloud data transmitted came to 45 million points per second using the data format developed by NTT laboratories. Stereo video was transmitted in 4K 60P using SMPTE 2110, a world standard for Internet Protocol (IP)-based video transmission, and vibrotactile data and voice data were transmitted using the Dante standard, which enables IP transmission of audio signals with low latency.

The above data were then received and reproduced at the NTT Pavilion in a powerfully expressive manner by displaying the dynamic point-cloud data and stereo-camera data on the 13.2 × 4.8 m (W × H) 3D Immersive LED System*3 (Fig. 6) provided by Hibino Corporation while merging them with Perfume 3D data previously obtained by volumetric capture. Camera work, light-particle effects, and other stage directions were added in real time to the transmitted dynamic point-cloud data to achieve a form of expression that makes good use of the point-cloud data. Tactile vibrations were conveyed by controlling 128 floor-embedded vibrators according to the positions of the performers displayed stereoscopically on the 3D LED display. This scheme achieved a live experience that combined the sense of touch with the senses of sight and hearing.
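
Two of the figures quoted in this article lend themselves to a quick back-of-the-envelope check: the light-propagation delay over roughly 20 km of fiber, and the number of points per frame implied by 45 million points per second. The sketch below is only an estimate. The group index of 1.47 is a typical value for silica fiber and is an assumption, as is treating the fiber route as exactly 20 km long and taking the frame rate to be the 30 measurements per second mentioned earlier.

```python
SPEED_OF_LIGHT_M_S = 299_792_458   # exact, by definition of the metre
FIBER_GROUP_INDEX = 1.47           # assumption: typical for silica fiber


def fiber_delay_ms(length_km: float,
                   group_index: float = FIBER_GROUP_INDEX) -> float:
    """One-way propagation delay of light in fiber, in milliseconds."""
    seconds = length_km * 1000 * group_index / SPEED_OF_LIGHT_M_S
    return seconds * 1000


def points_per_frame(points_per_second: float,
                     frames_per_second: float) -> float:
    """Average number of points carried by each frame."""
    return points_per_second / frames_per_second


delay = fiber_delay_ms(20)            # roughly 0.1 ms
ppf = points_per_frame(45e6, 30)      # 1.5 million points per frame
print(f"propagation delay: {delay:.3f} ms, points per frame: {ppf:,.0f}")
```

Under these assumptions, propagation over the fiber accounts for only about a tenth of a millisecond, which is a very small share of the 100 ms end-to-end figure; most of that budget is spent on measurement, processing, and display rather than on distance.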

Preparations for this trial were particularly intensive from mid-March, when the tent was set up in Expo’70 Commemorative Park, to the day of the live performance, a period of less than three weeks. During this period, staff were divided between the Expo’70 Commemorative Park side and the Yumeshima (NTT Pavilion) side. Nevertheless, remote production, in which the equipment necessary for video, audio, and lighting direction was controlled over the IOWN APN, significantly decreased the number of round trips that those in charge had to make between the two venues, and the work was completed efficiently.

This transmission trial was showcased before many members of the media invited to the NTT Pavilion as a one-time-only live performance. The live transmission was also delivered on YouTube via a special website [1, 2]. The live performance, which lasted about seven minutes, was followed by a demonstration of bidirectional communication consisting of a conversation between a host at the NTT Pavilion and the members of Perfume at Expo’70 Commemorative Park. Experiencing this conversation, one could not help but feel the possibility that future communication over IOWN will convey all five senses, not just sight and hearing, with low latency regardless of the distance between two locations. The live performance was saved just as it was transmitted on April 2, 2025 so that visitors to Zone 2 of the NTT Pavilion could relive the experience of that day during Expo 2025.

5. Future outlook

The dynamic 3D spatial-data transmission and reproduction technology and vibrotactile sound field presentation technology introduced in this article are promising for application to solutions for stadiums and arenas that aim to deliver a high-presence experience. Making measured data usable in cyberspace will enable diverse applications beyond simply experiencing content in the metaverse. We can envision the use of such data in self-driving systems, in the maintenance and management of social infrastructures, and in prediction, analysis, and simulations conducted in cyberspace in a variety of industrial fields.

References
